Taking responsibility for complexity (section 2.5): How are problems understood? Conflicting perspectives and divergent goals

This article is section 2.5 of a series of articles featuring the ODI Working Paper Taking responsibility for complexity: How implementation can achieve results in the face of complex problems.

Complex problems and the challenges they pose. This section [Section 2] should enable the reader to assess whether their implementation challenge is in fact a ‘complex’ problem, and to identify key characteristics to mark out the appropriate tools for managing the type of complexity faced. It first describes what is meant by a complex problem, and then outlines three specific aspects of complex problems that cause problems for traditional policy implementation. It goes into detail on each of these aspects, providing explanations and ideas to help the reader identify whether their policy or programme is complex in this way (Sections 2.3-2.5).

2.5 How are problems understood? Conflicting perspectives and divergent goals

Sometimes there are many plausible and equally legitimate interpretations of a policy issue, and an array of seemingly conflicting evidence. Different groups come from different starting points or assumptions, and propose measures that set out to meet different objectives. Complex issues are messy and multidimensional, with a variety of competing perspectives and interlinked forces and trends. Decision-makers face ambiguity, where the available knowledge and information support several different interpretations at the same time: information might be contradictory and contested, and it is not clear what is most relevant or how it might be interpreted. For example, setting appropriate goals for policies to reduce the negative impacts of developed country supermarkets on developing countries might be particularly difficult, because of the need to negotiate between a wide variety of dimensions. Buying cut flowers from relatively ‘dry’ areas of the world may seem bad because it puts greater pressure on scarce water resources and diverts them from more socially pressing needs; however, significant shifts in buying patterns are likely to hit small producers hard. Alternatively, action on labour laws may be hard to implement in the face of downward pressure on prices and competition between supermarkets.

However, programmes must be implemented even without a consensus on the goals of a policy, and even when it may not be possible to tightly define the specific problem or question that policy should address. The challenge is how to link an intervention to the concepts, understandings and information required to make it successful, how decision-making can take place fruitfully and how to link the knowledge required to guide policy and practice. In the face of complex problems, a broad conception must be taken of ‘knowledge’ and a wide range of perspectives may be needed to properly understand the issues at hand. Different academic disciplines and different approaches to understanding problems all rely on embedded assumptions, which may be useful for looking at certain aspects of an issue but not others. More broadly, different ‘ways of knowing’ all bring different strengths and weaknesses to bear on a problem, and it is not appropriate to suggest there is any one scale of what constitutes the most ‘robust’ knowledge or ‘rigorous’ methodology for understanding problems.

Work on the links between science and society emphasises that complex policy problems require a new approach to knowledge production: moving from Mode 1 knowledge production, which is predominantly academic, investigator-initiated and discipline-based, to Mode 2, which is context-driven and problem-focused, mobilising a range of theoretical perspectives and practical methodologies [1]. Funtowicz and Ravetz [2] argue that complex problems (which they define as being where facts are uncertain, values in dispute, stakes high and decisions urgent) require a ‘post-normal science’, with scientific experts required to share the field of knowledge production with an extended peer community (stakeholders affected by an issue and willing to enter into dialogue on it such as activist groups, think tanks, media professionals or even theologians and philosophers). Extensive action research carried out by the Australian National University (ANU) suggests that, for complex problems, five key contributors bring the knowledge necessary for long-term, constructive decisions and processes of collective governance: key individuals; the affected community; the relevant specialists; the influential organisations; and people with a shared holistic focus [3].

In these contexts, the challenge of drawing on knowledge and information for decision-making cannot proceed in a mechanistic or instrumental way, but is instead interpretive. As Kuhn showed in his work on scientific revolutions [4], decisions between perspectives and frameworks drawing on different underlying assumptions and values involve contextual and subjective judgements and interpretation. Looking at this issue from a more practical point of view, effective management in the face of complex problems is linked to the ability of managers to interpret information, rather than the abundance of accurate information [5]. Extensive empirical research on decision-making has emphasised the central role of sense making in the face of complex problems [6], as a way to begin to bring together divergent discourses and perspectives.

Moreover, contrasting perspectives may well be part of the problem that must be addressed. For complex issues, action is often required by a number of actors, who may see their interests as being at loggerheads with those of others, or who may not buy into the importance of an issue (to differing degrees); conflicting actors may base their position on equally conflicting visions of the problem and its solution. However, a variety of sources (e.g. Heclo [7]) show that the ways people understand their own interests are not static or uniform, but rather are shaped by their experiences and expectations, and their different ideas and beliefs; and that values and beliefs are often a driving force for sustaining coalitions and catalysing action [8]. Promoting action and change may therefore require that these perspectives change, so people and institutions can learn and evolve in terms of the way they understand and tackle problems. For example, Ostrom [9] shows that, where a set of actors have shared beliefs, norms and preferences, with a variety of direct relations and interactions, they will be more likely to work towards institutional transformations for their common good. Perceptions of shared interests, ideas and values are central to inclusive action; this highlights the importance of building social capital between actors.

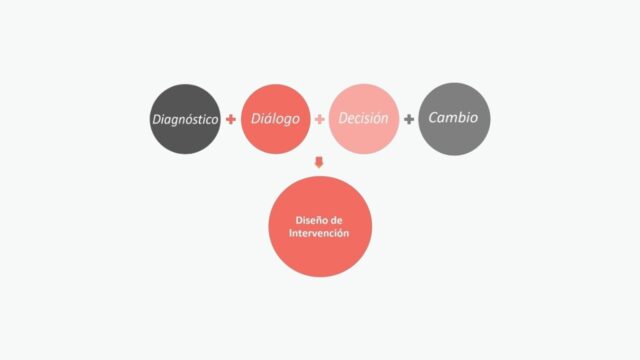

This means that different actors need to be involved in shaping the response to a problem, coming together to deliberate and negotiate understanding and action on an issue. Such deliberative processes have been shown to have transformative effects; those who are being affected by problems need to be involved in identifying the important elements of the relevant system, as well as in defining and managing their solutions [10, 11]. Similarly, studies of ‘deliberative democracy’ in political theory suggest that reasoned processes of fair communication and inclusive deliberation lay the foundation for public accountability [12]; as such, working towards a reasoned, public account that justifies a policy is a necessary extension to representative democracy [13].

These dialogues can become central elements in the change process, to resolve conflicts and build the capital required. Altering beliefs can help to ‘mobilise the power and the resources to change things’ by looking to ‘unlock resources claimed by the status quo’ [14]. Opening lines of communication itself alters incentives; communication can lead to collaboration; and other equilibria may be found.

Unsuitability of traditional tools

Project and programme implementation, under approaches such as results-based management (RBM), relies on specifying narrow sets of goals, presumed to be unambiguous and measurable in terms of a few quantitative indicators. In the face of difficult issues, assessments tend to focus on producing a number of tightly defined, narrowly focused analyses, which look in depth at specific dimensions of an issue (e.g. in the form of a series of separate assessments), generally sent to different departments for consideration and/or sign-off (e.g. environmental assessments may be considered in bulk by one department, which does not see other aspects of each proposed intervention). Reams of results indicators are produced (with differing levels of meaningfulness), based on the idea that the solution to effective management is abundant information, with decision-makers given little space or time to pay attention to interpreting it. More technically minded policy-makers and researchers often dismiss the relevance of local knowledge, too often seeing citizens affected by a problem or living in an area as requiring knowledge transfer and training, and local practices as requiring ‘modernisation’, irrespective of the ways in which they are appropriate for local circumstances, let alone values and history [15].

Research, knowledge and information are generally included as if they are a purely technical input, providing a neutral steer and instrumentally useful for meeting pre-agreed goals and questions. The ‘instrumental’ approach to knowledge production looks for academic research to be summarised in a few clear action points, as atomised inputs into seemingly ‘common sense’ problems, looking to ‘control out’ the role of context. For example, systematic reviews, which have become more and more popular with development agencies, frequently employ a ‘meta-narrative’ approach (with some notable exceptions), which focuses on providing answers as to which (parts of) programmes have the maximum effect on an outcome when averaged across the maximum number of contexts. This has been criticised as inappropriate in the face of complex social problems, because a number of different causal mechanisms may be operating across the different programmes, because mean outcomes are overly simplified measures and because the role of contextual factors becomes concealed [16].

These problems are not all a result of the implementation frameworks: many argue that traditional science and research themselves are not well-equipped to deal with complex challenges, being divided into ‘silos’ and disciplines focusing on specific dimensions and aspects of societal problems [17]. These structures have emerged from particular approaches to analytical thinking since the 18th century, which require the division of knowledge into its constituent parts rather than paying attention to more holistic questions.

Since these approaches to providing knowledge for policy are based on underlying assumptions that are inappropriate for complex problems, they can often prove of limited relevance to design and implementation. Expensive appraisals and careful reviews of the research often capture just a narrow range of the issues at stake. Where disagreements in the evidence base are recognised (e.g. contested definitions of problems and various perspectives suggesting contrasting priorities for action), there is little guidance to help staff draw anything useful from this. If explicit attention is paid to how a problem is framed, this tends to be done informally in small circles of power, with the task perceived as having an internally consistent solution; more generally, the voices and perspectives of a variety of groups are not considered. The instrumental approach to knowledge for policy means advice is ‘decoupled’ from the theoretical and explanatory frameworks on which it relies, so that key assumptions are hidden and context-sensitive policy judgements in fact become harder to make [18].

These tricky issues cannot be ‘wished away’: decisions on framing issues and differentiating between competing knowledge claims will always arise for complex issues, even if they are implicit or hidden. Assumptions that may seem common sense to one actor could be drastically wrong, or inappropriate for some contexts, and should be open to scrutiny and discussion. This process of sense making may otherwise be done unconsciously or implicitly by decision-makers, who, like all people, are likely to have certain biases and assumptions, along with unquestioned beliefs and paradigms. Alternatively, it may be done in closed spaces that are not accessible to other actors with relevant perspectives to add, who are hence excluded, such as when lobbyists target decision-makers behind closed doors in order to ‘frame’ the issue as they prefer it.

It is universally acknowledged that formal research can be only one input of many into policy decisions; decisions must be based not just on ‘scientific evidence’ (that is, knowledge gained through formal research) but must draw on a variety of factors, including values; political judgement; habits and tradition; and professional experience and expertise [19]. Where there is consensus here, it may be sufficient for individual decision-makers to make judgements, but not where this would be to ignore important contrasting perspectives. Technical studies may attempt to mask the difficult political judgements made, but the façade is unlikely to convince all actors involved in the issue, whose buy-in to a solution is likely to be needed to ensure that intention is translated into action on the ground (e.g. Ferguson [20]). Moreover, in the face of controversial issues, scientific and analytical inputs are unlikely to solve the matter, as different camps simply talk ‘past’ each other, relying on different underlying assumptions and goals. Here, the use of research in policy can in fact serve to intensify the controversy, and drive conflicting camps to greater extremes [21].

Next part (section 2.6): Summary – Complex problems and the challenges they pose.

See also these related series:

- Exploring the science of complexity

- Planning and strategy development in the face of complexity

- Managing in the face of complexity.

Article source: Jones, H. (2011). Taking responsibility for complexity: How implementation can achieve results in the face of complex problems. Overseas Development Institute (ODI) Working Paper 330. London: ODI. (https://www.odi.org/sites/odi.org.uk/files/odi-assets/publications-opinion-files/6485.pdf). Republished under CC BY-NC-ND 4.0 in accordance with the Terms and conditions of the ODI website.

References:

1. Gibbons, M., Limoges, C., Nowotny, H., Schwartzman, S., Scott, P. and Trow, M. (1994). The New Production of Knowledge: The Dynamics of Science and Research in Contemporary Societies. London: Sage.

2. Funtowicz, S. and Ravetz, J. (1992). ‘Three Types of Risk Assessment and the Emergence of Postnormal Science.’ In Krimsky, S. and Golding, D. (eds) Social Theories of Risk. London: Praeger.

3. Brown, V. (2007). ‘Collective Decision-making Bridging Public Health, Sustainability Governance, and Environmental Management.’ In Soskolne, C. (ed.) Sustaining Life on Earth: Environmental and Human Health through Global Governance. Lanham, MD: Lexington Books.

4. Kuhn, T. (1962). The Structure of Scientific Revolutions. Chicago, IL: University of Chicago Press.

5. Snowden, D. and Boone, M. (2007). ‘A Leader’s Framework for Decision Making.’ Harvard Business Review 85(11): 68-76.

6. Kurtz, C.F. and Snowden, D. (2003). ‘The New Dynamics of Strategy: Sense-making in a Complex and Complicated World.’ IBM Systems Journal 42(3): 462-483.

7. Heclo, H. (1978). ‘Issue Networks and the Executive Establishment.’ In King, A. (ed.) The New American Political System. Washington, DC: American Enterprise Institute for Public Policy Research.

8. Sabatier, P. and Jenkins-Smith, H. (eds) (1993). Policy Change and Learning: An Advocacy Coalition Approach. Boulder, CO: Westview Press.

9. Ostrom, E. (1992). ‘Community and the Endogenous Solution of Commons Problems.’ Journal of Theoretical Politics 4(3): 343-352.

10. Funtowicz, S. and Ravetz, J. (1992). ‘Three Types of Risk Assessment and the Emergence of Postnormal Science.’ In Krimsky, S. and Golding, D. (eds) Social Theories of Risk. London: Praeger.

11. Röling, N. and Wagemakers, M. (eds) (1998). Facilitating Sustainable Agriculture: Participatory Learning and Adaptive Management in Times of Environmental Uncertainty. Cambridge and New York: Cambridge University Press.

12. Habermas, J. (1984). The Theory of Communicative Action: Reason and the Rationalization of Society. Boston: Beacon Press.

13. Delli Carpini, M., Cook, F. and Jacobs, L. (2004). ‘Public Deliberation, Discursive Participation and Citizen Engagement: A Review of the Empirical Literature.’ Annual Review of Political Science 7: 315-344.

14. Westley, F., Zimmerman, B. and Quinn Patton, M. (2006). Getting to Maybe: How the World Is Changed. Toronto: Random House.

15. Chambers, R. (2008). Revolutions in Development Inquiry. London: Earthscan.

16. Pawson, R. (2002). ‘Evidence-based Policy: The Promise of “Realist Synthesis.”’ Evaluation 8(3): 340-358.

17. Clark, W. (2007). ‘Sustainability Science: A Room of Its Own.’ Proceedings of the National Academy of Sciences 104(6): 1737-1738.

18. Cleaver, F. and Franks, T. (2008). ‘Distilling or Diluting? Negotiating the Water Research-Policy Interface.’ Water Alternatives 1(1): 157-177.

19. Lomas, J., Culyer, T., McCutcheon, C., McAuley, L. and Law, S. (2005). ‘Conceptualising and Combining Evidence for Health System Guidance.’ Ottawa: Canadian Health Services Research Foundation.

20. Ferguson, J. (1995). The Anti-politics Machine: Development, Depoliticization, and Bureaucratic Power in Lesotho. Minneapolis: University of Minnesota Press.

21. van Eeten, M. (1999). ‘“Dialogues of the Deaf” on Science in Policy Controversies.’ Science and Public Policy 26(3): 185-192.