Taking responsibility for complexity (section 3.2.4): Implementation as an evolutionary learning process

This article is part of section 3.2 of a series of articles featuring the ODI Working Paper Taking responsibility for complexity: How implementation can achieve results in the face of complex problems.

In the context of complex problems, and given the limits placed on planning and ex ante analysis, intervention itself (whether a policy, programme or project, and whether carried out by an international agency, national government or local people) becomes a crucial driver of learning. As well as taking advantage of opportunities for learning that arise naturally (as with ‘natural experiments’ [1]), learning through intervention can be done in an active manner. For example, ‘active’ adaptive management involves deliberately carrying out some interventions and perturbations of the system, in order to test hypotheses and generate a response that will shed light on how to address a problem. This is also the underlying premise of policy ‘pilots,’ whereby a proposed approach is tried out on a small scale in order to understand whether it ‘works’ [2].

However, one major criticism of pilots is that too often they are not allowed to ‘fail,’ and hence they provide less opportunity for learning. The importance of ensuring that you can learn from an intervention is emphasised in Snowden’s concept of ‘safe-fail experiments’ [3]: these are small interventions designed to test ideas for dealing with a problem, in situations where it is acceptable for them to fail critically. The focus, then, is on learning from a series of low-risk failures. As an approach to implementation, this involves the following steps. First, elicit ideas for tackling the problem from anyone who has one, and design safe-fail experiments to test each. Next, flesh them out, cost them and subject them to challenge and review – with the aim of keeping experiments small but carrying out a large number of them. Crucially, for each experiment to be valid, it should be possible to observe whether results are consistent with the idea or whether the idea is proved wrong. An alternative suggestion is to focus not on confirming or falsifying ideas but on cognitive dissonance as the source of learning: seeking surprise and looking into events and outcomes that were wholly unexpected at the outset of an intervention [4].

The idea of experimentation is at the centre of what some term an ‘evolutionary’ approach to implementation, advocated by David Ellerman, former Chief Advisor to Joe Stiglitz at the World Bank [5], and by Owen Barder [6]. The two basic processes are variation, where a number of different options are pursued, and selection, where, based on feedback from the environment, some options are deemed more successful and are replicated.

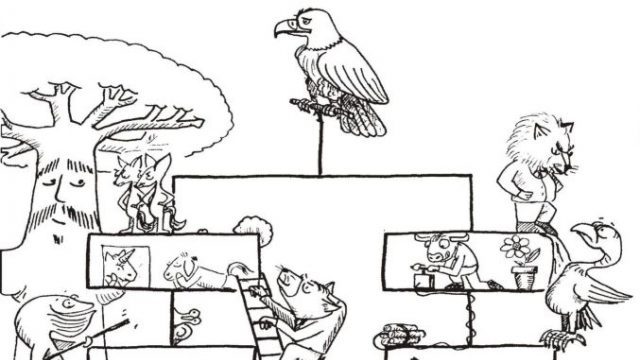

As part of an evolutionary approach, the first step is to promote variation and try many different things in response to a problem, with ‘trial and error’ as the central aim of a policy. Given the inherent uncertainty of planning, it is most appropriate to facilitate a wide variation of small-scale interventions, allowing a ‘breadth first’ approach to exploring solutions [7]. Research shows this kind of approach is more likely to achieve success in the long run in complex environments. Beinhocker [8] argues that strategy should be seen not as a single ‘big bet’ but rather as a portfolio of experiments: setting an overriding goal and then simultaneously pursuing a multitude of diverse plans, each of which has the possibility of evolving towards that goal. This is another way for central policy-making bodies to capitalise on the effectiveness of lower levels: tackling problems by sponsoring or supporting a number of small-scale interventions, setting the most appropriate scale of intervention, but then allowing for considerable variation in how the issue is approached.

The challenge is then to monitor and evaluate the effectiveness of different approaches carefully, and to provide mechanisms to select successful characteristics, which then form the basis for the next round of evolution and variation. The main role of the central agency or top-level body would be to assist with the M&E [9], possibly synthesising lessons learnt across all projects and looking for what makes for successful projects in different contexts [10]. These lessons could then be communicated back to the lower levels, made public and used as a benchmark for performance in the next stage of funding – step-by-step ‘ratcheting up’ the performance of the whole [11].

This kind of strategy has been used not just for social problems but also to tackle technical issues. For example, a company used these processes to develop a new nozzle for foam: a set of (random) variations on the initial prototype was tested and the most effective chosen; a set of random variations on that improved nozzle was tried and tested and the most effective chosen for further replication; and so on. Through this process, the company was able to improve the performance of the nozzle significantly, in the face of prohibitively difficult fluid dynamics. In addition, this process was far more successful than having a team of experts design the optimum nozzle [12].
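The variation-and-selection loop described in the nozzle example can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the company's actual procedure: the `performance` function is a hypothetical stand-in for a physical test, a design is reduced to three made-up numeric parameters, and the names `vary` and `evolve` are invented for this sketch.

```python
import random


def performance(design):
    """Hypothetical stand-in for testing a prototype (higher is better).

    In a real setting this would be a physical measurement; the hidden
    target below exists only so that the sketch has something to score.
    """
    hidden_target = [0.3, 0.7, 0.5]
    return -sum((d - t) ** 2 for d, t in zip(design, hidden_target))


def vary(design, rng, step=0.1):
    """Variation: return a random perturbation of an existing design."""
    return [d + rng.uniform(-step, step) for d in design]


def evolve(initial, generations=30, variants_per_round=10, seed=0):
    """Repeat variation and selection, keeping the best design each round."""
    rng = random.Random(seed)
    best = initial
    history = [performance(best)]
    for _ in range(generations):
        # Variation: try several small random changes to the current best.
        candidates = [vary(best, rng) for _ in range(variants_per_round)]
        # The current best stays in the pool, so performance can only
        # ratchet upwards from one round to the next.
        candidates.append(best)
        # Selection: feedback from testing decides what is replicated.
        best = max(candidates, key=performance)
        history.append(performance(best))
    return best, history


best, history = evolve([0.0, 0.0, 0.0])
print("initial score:", history[0])
print("final score:  ", history[-1])
```

Note that the loop never needs to know why a variant performs better, only that it does. This mirrors the point that the process worked despite the fluid dynamics being too difficult to model directly.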

Next part (section 3.2.5): Creating short, cost-effective feedback loops.

See also these related series:

- Exploring the science of complexity

- Planning and strategy development in the face of complexity

- Managing in the face of complexity.

Article source: Jones, H. (2011). Taking responsibility for complexity: How implementation can achieve results in the face of complex problems. Overseas Development Institute (ODI) Working Paper 330. London: ODI. (https://www.odi.org/sites/odi.org.uk/files/odi-assets/publications-opinion-files/6485.pdf). Republished under CC BY-NC-ND 4.0 in accordance with the Terms and conditions of the ODI website.

References and notes:

1. The concept of ‘natural experiments’ marks out instances in the course of policy implementation that offer particularly rich opportunities for learning, such as when two groups that are very similar in many ways were and were not affected by an intervention, which gives an opportunity to estimate the effect that the intervention had (White, H., Sinha, S. and Flanagan, A. (2006). ‘A Review of the State of Impact Evaluation.’ Washington, DC: Independent Evaluation Group, World Bank.). For example, squatters outside Buenos Aires were awarded title to the land on which they were squatting, with compensation paid to the original owners. Some owners disputed the settlement in court; the squatters on their land did not obtain title. Whether squatters got a title or not had nothing to do with their characteristics. Hence, non-title holders and title holders can be compared to examine the impact of having title on access to credit (there was none) and on investing in the home (there was some).

2. Cabinet Office (2003). ‘Trying it Out.’ London: Cabinet Office, Strategy Unit.

3. Snowden, D. (2010). ‘Safe-fail Probes.’ (https://www.cognitive-edge.com/blog/safe-fail-probes/).

4. Guijt, I. (2008). ‘Seeking Surprise: Rethinking Monitoring for Collective Learning in Rural Resource Management.’ Wageningen: Wageningen University.

5. Ellerman, D. (2006). ‘Rethinking Development Assistance: Networks for Decentralized Social Learning.’

6. Barder, O. (2010). ‘Development, Complexity and Evolution.’

7. Ellerman, D. (2004). ‘Parallel Experimentation: A Basic Scheme for Dynamic Efficiency.’ Riverside, CA: University of California.

8. Beinhocker, E. (2006). The Origin of Wealth: Evolution, Complexity, and the Radical Remaking of Economics. Cambridge, MA: Harvard Business Press.

9. Monitoring & Evaluation.

10. Snowden, D. (2010). ‘Safe-fail Probes.’ (https://www.cognitive-edge.com/blog/safe-fail-probes/).

11. Ellerman, D. (2004). ‘Parallel Experimentation: A Basic Scheme for Dynamic Efficiency.’ Riverside, CA: University of California.

12. Barder, O. (2010). ‘Development, Complexity and Evolution.’