Judgment and decision making with unknown states and outcomes

By Michael Smithson. Originally published on the Integration and Implementation Insights blog.

What issues arise for effective judgments, predictions, and decisions when decision makers do not know all the potential starting positions, available alternatives and possible outcomes?

A shorthand term for this collection of possible starting points (also known as prior states), alternatives, and outcomes is “sample space.” Here I elucidate why sample space is important and how judgments and decisions can be influenced when it is incomplete.

Why is sample space important?

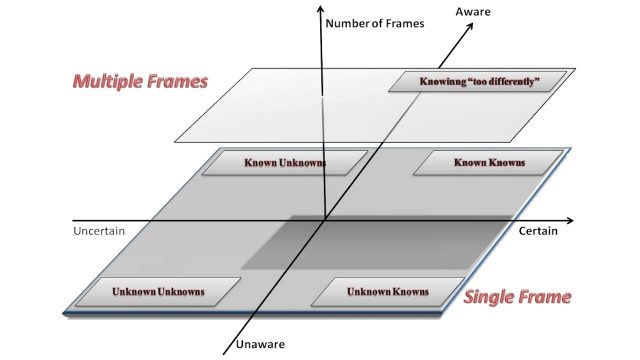

When it comes to dealing with unknowns, economists and others traditionally distinguish between “risk” (where probabilities can be assigned to every possible outcome) and “uncertainty” (where the probabilities are vague or unknown). Both of those versions of unknowns assume that decision makers know everything about the sample space.

In the real world, however, people often have to make important decisions when they don’t know everything about the relevant sample space. For example, in a pandemic the virus will mutate in ways and at times that cannot be predicted, but decisions about lock-downs and other measures have to be made based on the known variants. The current sample space for COVID-19 includes the known strains of this virus, especially the “delta” and “omicron” variants. Decisions are made with a background assumption that these are the only variants, even though another variant may already be present or emerging that has not yet been identified.

Problems with current prescriptions for dealing with sample space

Rational decision theories such as expected utility theory require that decisions be based on beliefs about the sample space, but they assume that the sample space is completely known. These theories therefore sweep the problem of how to deal with an incompletely known sample space under the carpet. As a result, there are few prescriptions for how a sample space should be constructed, or how decisions should be made if there is no complete description of the sample space.

Moreover, where there are prescriptions, they don’t always seem to be valid. For instance, economists Karni and Vierø (2013) propose that rational agents faced with an incomplete sample space should adhere to what they call Reverse Bayes.

Suppose that a particular kind of virus has two currently known strains, A and B, and the best estimates of the proportions of cases for people who contract this kind of virus are P(A) and P(B) (where P(B) = 1 – P(A)). Now imagine that a new third strain (C) emerges. Karni and Vierø say that regardless of whatever proportion of the cases C ends up having, the ratio P(A)/P(B) should remain unchanged. For example, suppose that originally P(A) = 0.4 and P(B) = 0.6 (so that P(A)/P(B) = 2/3). If P(C) eventually turns out to be 0.5, then it should also turn out that P(A) = 0.2 and P(B) = 0.3, so that the ratio is still 2/3.
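The arithmetic in this example can be sketched in a few lines of Python. This is only an illustration of the ratio-preserving rescaling described above, not code from Karni and Vierø; the function name `reverse_bayes_rescale` and its parameters are hypothetical.

```python
def reverse_bayes_rescale(known, new_name, p_new):
    """Make room for a newly discovered outcome while keeping the
    probability ratios among the already-known outcomes unchanged.

    known    -- dict mapping known outcomes to their probabilities
    new_name -- label for the newly discovered outcome (hypothetical helper)
    p_new    -- probability that the new outcome ends up having
    """
    if not 0.0 <= p_new < 1.0:
        raise ValueError("p_new must be in [0, 1)")
    total = sum(known.values())
    # Shrink every known probability by the same factor, so ratios are preserved.
    scale = (1.0 - p_new) / total
    updated = {name: p * scale for name, p in known.items()}
    updated[new_name] = p_new
    return updated


before = {"A": 0.4, "B": 0.6}
after = reverse_bayes_rescale(before, "C", 0.5)
print(after)                       # probabilities for A, B and C
print(after["A"] / after["B"])     # same ratio as before["A"] / before["B"]
```

Because every known probability is multiplied by the same factor, the sketch cannot represent the counter-example discussed next, where the new strain affects A and B differently.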

Reverse Bayes is attractive, because it says that no matter what unknown elements lurk in the sample space, the probability-ratios among its known elements are fixed. Unfortunately, scholars have come up with counter-examples. For instance, what if getting strain C and recovering protects the person more against strain B than against strain A? Over time, the effects of strain C will increase the P(A)/P(B) ratio.

How people estimate the size of a sample space

In addition to the lack of guidance for how to “rationally” deal with an incomplete sample space, little research has been done on how people actually do deal with this. My colleague Yiyun Shou and I recently investigated how people estimate the size of a sample space based on what they’ve learned and believe about it so far (Smithson and Shou, 2021). It is already established that if people think that an incompletely known sample space is big, they are more likely to be cautious about making decisions based solely on the parts of the sample space they know about. We wanted to explore this further.

Our initial idea was that if people don’t have prior beliefs about the sample space then the larger the number of unique elements in the sample space they’ve seen, the larger they think the sample space will be. This is akin to the models that biologists use when estimating biodiversity in an environment based on the samples of species they have obtained—the greater the number of observed species, the higher the estimate of biodiversity.
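As a rough illustration of the kind of biodiversity model meant here, the sketch below uses the bias-corrected Chao1 richness estimator, one well-known estimator from ecology. Choosing Chao1 is an assumption made for this example; the text does not say which models were the point of comparison. The function name `chao1` and the marble data are invented for illustration.

```python
from collections import Counter


def chao1(observations):
    """Bias-corrected Chao1 estimate of the total number of distinct kinds,
    given a sample of observed kinds (an illustrative choice of estimator)."""
    counts = Counter(observations)
    s_obs = len(counts)                                  # distinct kinds seen
    f1 = sum(1 for c in counts.values() if c == 1)       # kinds seen exactly once
    f2 = sum(1 for c in counts.values() if c == 2)       # kinds seen exactly twice
    # Many kinds seen only once suggests many kinds not yet seen at all.
    return s_obs + f1 * (f1 - 1) / (2 * (f2 + 1))


# Invented sample of marble colours drawn from a bag.
marbles = ["red", "red", "blue", "blue", "green",
           "yellow", "yellow", "yellow", "white"]
estimate = chao1(marbles)
print(len(set(marbles)), estimate)  # observed colours, estimated total colours
```

The estimate is never smaller than the number of distinct kinds observed, and it grows when many kinds have been seen only once.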

Our experiments supported our hypothesis to some extent. When presented with a hypothetical sample of marbles drawn from a large bag of them, the greater the variety of colours in the sample (e.g., 4 versus 15 colours), the larger the number of additional new colours people expected to see as more marbles were drawn from the bag. However, this didn’t hold true for the same samples if they were colours of automobiles. Most people in our experiment would have had prior beliefs about how many distinct colours of automobiles there are, and they would infer that having seen 15 of them there would not be many more left to see.

Our experiments also threw up a couple of surprises. We found that not only does a larger number of distinct elements produce larger estimates of the size of the sample space, but greater qualitative diversity among the elements can do this too. For instance, 4 versus 12 unique polygonal shapes of drinks coasters has a smaller impact on estimates of how many unique shapes there are than 4 versus 12 unique shapes consisting of polygonal and animal-figures.

Moreover, the ability to retrieve examples of sample space from memory influences estimates of its size. Thus, 4 versus 12 unique polygonal shapes had a smaller effect than 4 versus 12 unique animal shapes, because most people have a larger supply in their memory of retrievable animals than polygons.

Memory retrieval can even be influenced by priming, which is when exposure to a stimulus subconsciously influences responses to a subsequent stimulus. As Gavanski and Hui (1992) point out, priming memory to retrieve an “incorrect” sample space will render the decision maker more likely to misestimate risks and make worse decisions. In one of our experiments we asked people to estimate how many English words end in “ak”, in one of two conditions:

- those given a sample of “ak”-ending words that included words ending in “eak” and

- those whose list did not include such words.

About 78% of the most frequently used English words ending in “ak” end in “eak”. Consequently, those whose sample included the “eak” words gave larger estimates of the number of “ak”-ending words than those whose sample did not. That is, exposure to “eak”-ending words primed people’s memory searches to retrieve a larger sample space of “ak”-ending words, whereas not being exposed to “eak”-ending words primed people to retrieve more restricted sample spaces.

Where next?

Current knowledge about how people make judgments and decisions with incompletely known sample space has barely scratched the surface. Likewise, there are few effective strategies for dealing with sample space ignorance, although there is a scattered literature proposing some guidelines. For instance, Smithson and Ben-Haim (2015) recommend that decision makers should prepare to encounter more surprises than they intuitively expect, and keep sufficiently many options open to avoid premature closure. We also recommend seeking outcomes that are steerable, that have observable rather than hidden consequences, and that do not entail sunk costs, resource depletion, or high transition costs.

Thinking about your own research or practice domains, how commonplace is sample space ignorance? Under what conditions is it most likely to crop up? Are there times when it has led to poor judgments, predictions, or decisions? And do you or others have ways of dealing with incompletely known sample spaces?

References

Gavanski, I. and Hui, C. (1992). Natural sample spaces and uncertain belief. Journal of Personality and Social Psychology, 63, 5: 766-780.

Karni, E. and Vierø, M. L. (2013). Reverse Bayesianism: A choice-based theory of growing awareness. American Economic Review, 103, 7: 2790-2810.

Smithson, M. and Ben-Haim, Y. (2015). Reasoned decision making without math? Adaptability and robustness in response to surprise. Risk Analysis, 35: 1911-1918.

Smithson, M. and Shou, Y. (2021). How big is (sample) space? Judgment and decision making with unknown states and outcomes. Decision, 8, 4: 237-256.

Biography

Michael Smithson PhD is an Emeritus Professor in the Research School of Psychology at The Australian National University. His primary research interests are in judgment and decision making under ignorance and uncertainty, statistical methods for the social sciences, and applications of fuzzy set theory to the social sciences.

Article source: Judgment and decision making with unknown states and outcomes.

Header image source: Dreamstime.