Models and Truth: Introduction [Systems thinking & modelling series]

This is part 34 of a series of articles featuring the book Beyond Connecting the Dots: Modeling for Meaningful Results.

“All models are wrong, but some are useful” – George E.P. Box

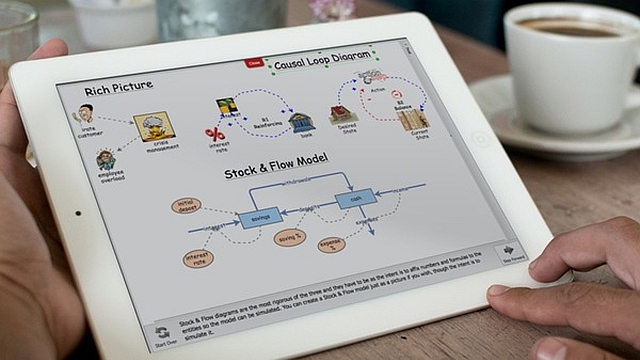

A model is a tool designed to reflect reality. A model is never a perfect mirror of reality, but often models can still be useful even with their imperfections. In this chapter, we will take a journey to explore different types of models and the distinctions commonly used to classify and understand them. We will consider several approaches to modeling that are quite different from the ones we have introduced throughout this book. These will help you understand the richer ecosystem of modeling tools and techniques and how the ones we have learned fit within this ecosystem.

The ultimate destination of this journey will be a clear understanding of the fundamental principles and approaches used to construct models. We will make many detours before arriving at this destination. In the end we will be able to divide models into two overarching categories based on their purposes and the techniques used to construct them. By mastering this divide, and how the work we and others do fits into it, we will obtain a rich perspective and understanding of the relationship between models and truth. We will also have a renewed appreciation for the strength and power of the techniques introduced in this book for tackling a wide swath of modeling problems.

Before we get there, however, let’s introduce some of the terminology commonly used to describe models. We’ll begin by taking a step back to discuss different kinds of models. Modeling is a wide-ranging field with many distinctions made by modelers and mathematicians. Three of these distinctions are presented below:

Deterministic versus Stochastic Models

There are two polar opposite views of the world. The Deterministic view says the fate of the universe is governed by strictly predictable laws of physics. In this view, the universe acts as if it were a giant machine; if its current state is known (down to each individual atomic particle), its future states through the rest of time are predetermined. The opposite (Stochastic) view is that the universe is ruled by chance and randomness. Random quantum mechanical fluctuations merge and amplify, leading to an infinite range of diverging possibilities.

Which of these two views holds more of the truth? We certainly do not know, and it is possible that physicists will never cease exploring this question. Albert Einstein had a viewpoint of special interest, however. He was a strong partisan of the more deterministic view, famously remarking, “God does not play dice with the world.”

When creating a model of a system, we must choose how we treat chance. Do we build our model deterministically, such that each time we run it we obtain the same results? Or do we instead incorporate elements of uncertainty so that each time the model is run we may see a different trajectory of outcomes?
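The choice can be illustrated with a minimal sketch. The function `simulate_population`, its starting value of 100, and the growth and noise parameters are all hypothetical and chosen only for illustration; they do not come from the book. With no noise the model returns the same trajectory on every run, while with noise each run (here, each random seed) may follow a different path.

```python
import random


def simulate_population(steps, growth_rate, noise_sd=0.0, seed=None):
    """Grow a population of 100 for `steps` periods (illustrative values only).

    noise_sd == 0 gives a deterministic model; noise_sd > 0 adds a random
    disturbance to the growth rate at every step, giving a stochastic model.
    """
    rng = random.Random(seed)
    population = 100.0
    trajectory = [population]
    for _ in range(steps):
        rate = growth_rate
        if noise_sd > 0:
            # Stochastic case: perturb the growth rate with Gaussian noise.
            rate += rng.gauss(0.0, noise_sd)
        population *= 1.0 + rate
        trajectory.append(population)
    return trajectory


# Deterministic: two runs are identical.
deterministic_a = simulate_population(10, 0.05)
deterministic_b = simulate_population(10, 0.05)

# Stochastic: runs with different seeds follow different trajectories.
stochastic_a = simulate_population(10, 0.05, noise_sd=0.02, seed=1)
stochastic_b = simulate_population(10, 0.05, noise_sd=0.02, seed=2)

print(deterministic_a == deterministic_b)
print(stochastic_a == stochastic_b)
```

Fixing the seed makes a stochastic run repeatable, which is useful for debugging, but the model is still stochastic in the sense that its results are one draw from a range of possible outcomes.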

Mechanistic versus Statistical Models [1]

When beginning to build a model of a system, there are many questions you should ask, two of which are:

- Do I know (or have a hypothesis of) the mechanisms that drive the system?

- Do I have data that describe the observed behavior of the system?

If the first question is answered in the affirmative, you can build a mechanistic model that replicates your understanding of (or hypothesis about) the true mechanisms in the system. If, on the other hand, the second question is answered in the affirmative, you can use statistical algorithms such as regressions to create a model of the system based purely on the data.

If neither question is answered affirmatively…well, in that case there isn’t much of anything you can build.
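To make the contrast concrete, here is a rough sketch of both approaches applied to the same quantity. Everything in it is an assumption for illustration: the observations are invented, `fit_line` is a hand-written least-squares fit standing in for "a regression", and `logistic_growth` encodes one possible hypothesis about mechanism (growth that slows as a carrying capacity is approached), with made-up parameter values.

```python
def fit_line(xs, ys):
    """Statistical approach: ordinary least squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return intercept, slope


def logistic_growth(initial, birth_rate, capacity, years):
    """Mechanistic approach: growth slows as the population nears capacity."""
    population = float(initial)
    trajectory = [population]
    for _ in range(years):
        population += birth_rate * population * (1.0 - population / capacity)
        trajectory.append(population)
    return trajectory


# Hypothetical observations (year, population size), invented for illustration.
observed_years = [0, 1, 2, 3, 4]
observed_sizes = [100, 109, 121, 130, 142]

# Statistical model: built purely from the data, no mechanism assumed.
intercept, slope = fit_line(observed_years, observed_sizes)
statistical_forecast = intercept + slope * 30

# Mechanistic model: built from an assumed mechanism and assumed parameters.
mechanistic_forecast = logistic_growth(100, 0.12, 500, 30)[-1]

print(round(statistical_forecast), round(mechanistic_forecast))
```

The two models can agree over the observed years and still diverge when extrapolated: the fitted line keeps rising, while the mechanistic model levels off at whatever carrying capacity was assumed.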

| Exercise 4-1 |
|---|
| A credit card company has hired you to build a model to predict defaults of new applicants. They give you a data set containing information on one million of their previous customers along with whether or not those customers ultimately defaulted.<br><br>Would it be better to build a mechanistic or statistical model for this data? |

| Exercise 4-2 |
|---|
| You have been commissioned to build a model of population growth for a herd of zebra in Namibia. You have some data on the historical size of the population of zebras, but this data is limited. You also have access to more than a dozen experts who have studied zebras their whole lives and have an intimate understanding of the behavior of the zebras.<br><br>Would it be better to build a mechanistic or statistical model for this data? |

Aggregated versus Disaggregated Models

When building a model, the issue of scale becomes very important. Imagine we are concerned about the effects of global climate change on water resources. We may wish to examine the question of whether there will be sufficient water supplies given a rise in future temperatures.

At what scale do we build this model? The range of possible scales is wide:

- At the most aggregate, we could estimate total worldwide water demands and supplies into the future.

- Maybe that is too coarse a scale, however, as clearly having excess water in Norway has little direct impact on the situation in Egypt. We could instead create a finer resolution model that separately looked at water demand and consumption in each country.

- Even that may still be too coarse. Maybe we should make our model more granular to look at specific cities or population clusters around the globe.

- At the extreme disaggregated level, we might even want to model individual people—all 7 billion of them—and their needs and movements around the world.

There is no simple answer to this question of optimal scale. The best choice is highly context-sensitive and depends on the needs of the specific modeler and application.
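A small sketch shows why the choice of scale matters. The supply and demand figures below are invented for illustration (they are not real data for Norway or Egypt), and the function names are hypothetical. The aggregate model reports a comfortable surplus, while the country-level model reveals a local shortfall that the aggregate hides.

```python
# Hypothetical annual water supply and demand, in arbitrary units.
water = {
    "Norway": {"supply": 400.0, "demand": 50.0},
    "Egypt": {"supply": 60.0, "demand": 80.0},
}


def aggregate_balance(regions):
    """Aggregated model: one worldwide balance of supply minus demand."""
    return sum(r["supply"] - r["demand"] for r in regions.values())


def regional_balances(regions):
    """Disaggregated model: a separate balance for each region."""
    return {name: r["supply"] - r["demand"] for name, r in regions.items()}


def regions_in_deficit(regions):
    """Names of regions whose demand exceeds their own supply."""
    balances = regional_balances(regions)
    return sorted(name for name, balance in balances.items() if balance < 0)


print(aggregate_balance(water))   # overall surplus
print(regional_balances(water))   # per-country picture
print(regions_in_deficit(water))  # shortfalls hidden by the aggregate
```

The finer model answers a question the coarse one cannot, but it also needs more data and more assumptions for every region added, which is part of why the right scale depends on the application.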

| Exercise 4-3 |
|---|
| You have been hired to build a model of world population growth. What is an appropriate level of aggregation/disaggregation for this model? Does your answer change if you vary the time scale? What would be the differences between a model designed to work 10 years in the future, one designed to work for 100 years, and one designed to work for 1,000 years? |

| Exercise 4-4 |
|---|
| Your company builds rulers. You have been asked to develop a model of global demand for rulers. What is an appropriate level of aggregation/disaggregation for this model? |

Next edition: Models and Truth: Prediction, Inference, and Narrative.

Article sources: Beyond Connecting the Dots, Insight Maker. Reproduced by permission.

Header image source: Beyond Connecting the Dots.

Notes:

1. This relates more broadly to the contrasting research approaches of induction and deduction. Induction starts with data and observations, which are analyzed to create a broader theory (similar to a statistical approach to modeling). Deduction starts with a theory and finishes with the collection of data to confirm the theory (similar to a more mechanistic approach to modeling). It is easy to confuse the meanings of induction and deduction; even great minds have done so. While Sir Arthur Conan Doyle’s character Sherlock Holmes attributes his impressive powers to “deduction”, he is actually using induction. Treating what we are calling “statistical” models here as a form of induction, we can also refer to them as “phenomenological” or “empirical” models.