Mastering the game of Go with deep neural networks and tree search [2016’s top 100 journal articles]

Part 1 of a miniseries reviewing selected papers from the top 100 most-discussed journal articles of 2016.

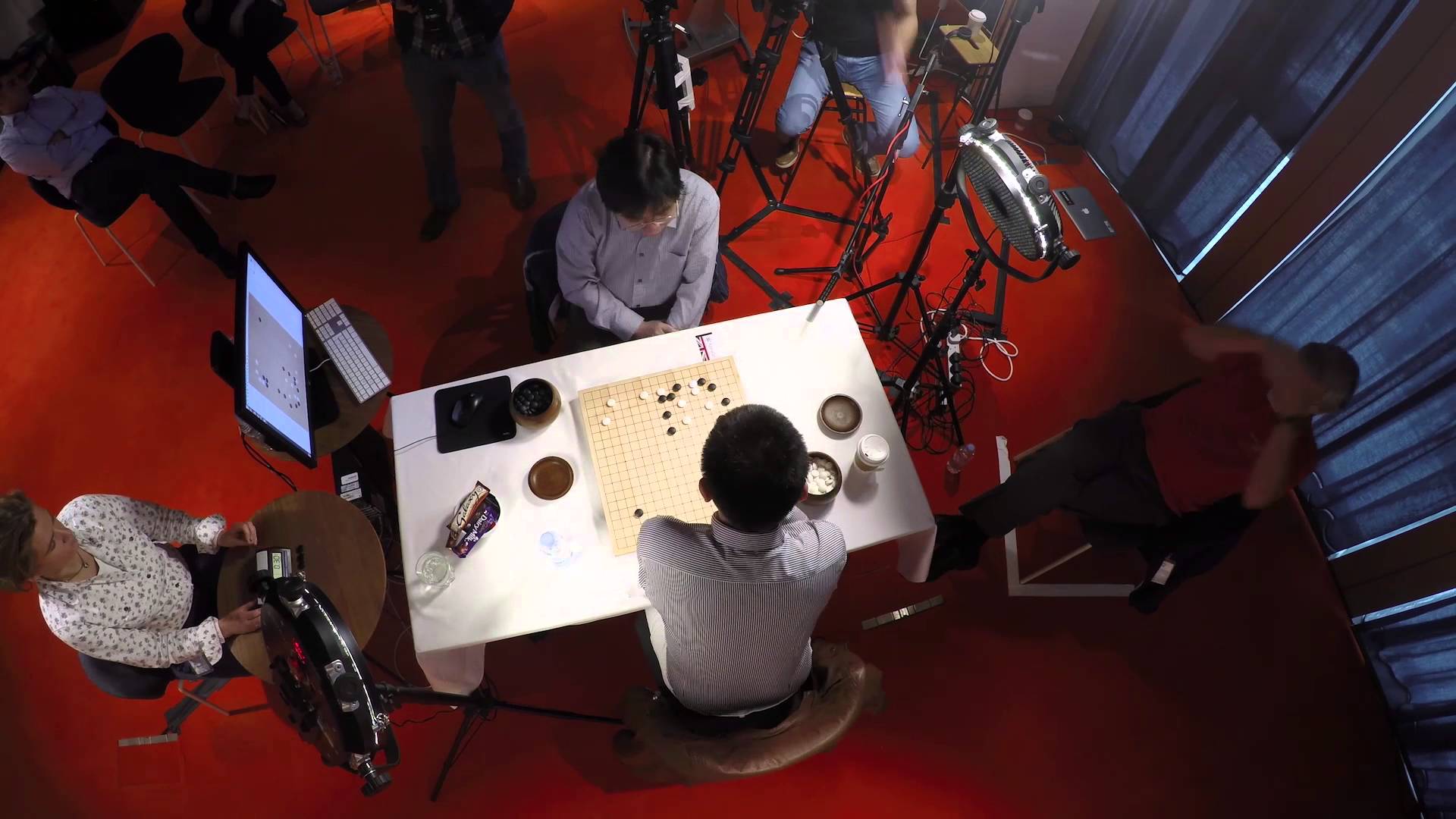

In March 2016, Google’s AlphaGo artificial intelligence (AI) program made global headlines when it beat Lee Sedol, one of the world’s strongest Go players, four games to one.

The ancient Chinese game of Go looks straightforward enough: players take turns placing black or white stones on a board, trying to capture the opponent’s stones or surround empty space to make points of territory. However, as Demis Hassabis, CEO and co-founder of Google DeepMind, explains, Go is actually profoundly complex, with more possible positions than there are atoms in the universe.
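
The scale of that comparison can be checked with a little arithmetic. The sketch below is only a rough upper bound, not a count of legal positions: it assumes a standard 19 x 19 board on which every point is empty, black, or white, and it uses the commonly quoted estimate of about 10^80 atoms in the observable universe (a figure assumed here, not taken from the article). The helper `order_of_magnitude` is a hypothetical name introduced for illustration.

```python
# Upper bound on board configurations: each of the 19 x 19 = 361 points
# is empty, black, or white. Many of these configurations are illegal,
# so the true number of legal positions is smaller.
BOARD_POINTS = 19 * 19
upper_bound_positions = 3 ** BOARD_POINTS

# Assumption: a commonly quoted order-of-magnitude estimate.
ATOMS_IN_OBSERVABLE_UNIVERSE = 10 ** 80


def order_of_magnitude(n: int) -> int:
    """Return the exponent of the leading power of ten (digit count minus 1)."""
    return len(str(n)) - 1


print(order_of_magnitude(upper_bound_positions))             # 172
print(upper_bound_positions > ATOMS_IN_OBSERVABLE_UNIVERSE)  # True
```

The number of legal positions is lower than this bound, roughly 10^170, but that still exceeds the atom estimate by about 90 orders of magnitude.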

Games such as checkers and chess have served as a testing ground for AI researchers since the 1950s, and the complexity of Go makes it an irresistible challenge. Hassabis explains that cracking Go required a different approach:

> Traditional AI methods—which construct a search tree over all possible positions—don’t have a chance in Go. So when we set out to crack Go, we took a different approach. We built a system, AlphaGo, that combines an advanced tree search with deep neural networks. These neural networks take a description of the Go board as an input and process it through 12 different network layers containing millions of neuron-like connections. One neural network, the “policy network,” selects the next move to play. The other neural network, the “value network,” predicts the winner of the game.
>
> We trained the neural networks on 30 million moves from games played by human experts, until it could predict the human move 57 percent of the time (the previous record before AlphaGo was 44 percent). But our goal is to beat the best human players, not just mimic them. To do this, AlphaGo learned to discover new strategies for itself, by playing thousands of games between its neural networks, and adjusting the connections using a trial-and-error process known as reinforcement learning. Of course, all of this requires a huge amount of computing power, so we made extensive use of Google Cloud Platform.
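
To make the interplay between the two networks and the tree search more concrete, here is a minimal sketch of how a search might choose which move to explore next. It is not AlphaGo's code. It assumes each candidate move carries a prior probability from the policy network, a visit count, and a running average of evaluations in the range -1 (loss) to +1 (win), and it scores moves as that average plus an exploration bonus proportional to prior / (1 + visits), in the spirit of the rule described in the paper. The names `Edge`, `select_move`, `backup`, and `c_puct`, the move labels, and the `+ 1` inside the square root are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass
from typing import Dict


@dataclass
class Edge:
    """Search statistics for one candidate move from the current position."""
    prior: float               # policy-network probability for this move
    visits: int = 0            # how often the search has tried this move
    total_value: float = 0.0   # sum of evaluations backed up through it

    @property
    def mean_value(self) -> float:
        # Average evaluation so far; 0.0 for a move that was never tried.
        return self.total_value / self.visits if self.visits else 0.0


def select_move(edges: Dict[str, Edge], c_puct: float = 1.0) -> str:
    """Return the move with the highest mean value plus exploration bonus."""
    total_visits = sum(edge.visits for edge in edges.values())

    def score(edge: Edge) -> float:
        # The bonus favours moves the policy network likes and the search
        # has rarely visited. The "+ 1" under the root is an assumption that
        # keeps the bonus non-zero before any move has been visited.
        bonus = c_puct * edge.prior * math.sqrt(total_visits + 1) / (1 + edge.visits)
        return edge.mean_value + bonus

    return max(edges, key=lambda move: score(edges[move]))


def backup(edge: Edge, value: float) -> None:
    """Record one evaluation (for example, from a value network) for a move."""
    edge.visits += 1
    edge.total_value += value


# Hypothetical priors for three candidate moves.
edges = {"D4": Edge(prior=0.6), "Q16": Edge(prior=0.3), "K10": Edge(prior=0.1)}

first_choice = select_move(edges)    # "D4": highest prior, nothing visited yet
backup(edges["D4"], -1.0)            # suppose the evaluation predicts a loss
second_choice = select_move(edges)   # "Q16": D4's poor value now outweighs its prior
```

The design point is that the policy network narrows the search to plausible moves while the evaluations correct it: a move with a strong prior is tried first, but it loses priority as soon as the backed-up results look bad.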

Further details can be found in Google’s paper “Mastering the game of Go with deep neural networks and tree search” [1], which is article #9 of the top 100 most-discussed journal articles of 2016.

References:

- Silver, D., Huang, A., Maddison, C. J., Guez, A., Sifre, L., van den Driessche, G., … & Hassabis, D. (2016). Mastering the game of Go with deep neural networks and tree search. Nature, 529(7587), 484–489.

Also published on Medium.