Building Confidence in Models: Model Design [Systems thinking & modelling series]

This is part 40 of a series of articles featuring the book Beyond Connecting the Dots, Modeling for Meaningful Results.

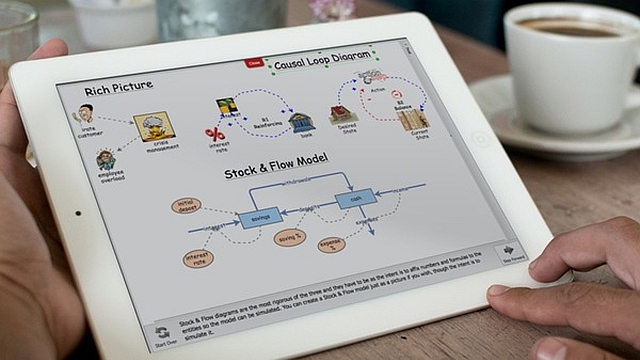

The design of a narrative model is of utmost importance and needs to be justified to its audience.[1] There are two primary aspects to a model’s design: its structure and the data used to parameterize it.

Structure

The structure of the model should mirror the structure of the system being simulated. Depending on the complexity of the system, the model may need to aggregate and simplify this reality to a greater or lesser degree. Nevertheless, all the primitives in the model should map to reality in a way that is understandable and relatable to the audience. Thus, if there is an object in the real system that behaves as a stock, a stock should exist in the model and should mirror the object’s position within the system. The same should hold true for the other primitives in the model. Each primitive would ideally be directly mappable onto a counterpart in the real system, and any key component in the real system should be mappable onto primitives in the model. Furthermore, feedback loops that exist in the system should exist within the model. These feedback loops should be explicitly identifiable in the model and would ideally be called out or marked in a way that highlights their presence to an audience.
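As a minimal sketch of this mapping, the Python below models a single stock with one inflow and one outflow, and names its two feedback loops in the docstring so a reader can find them. The population example, the `Stock` class, the `simulate` function, and the rate values are all illustrative assumptions. They are not taken from the book or from Insight Maker.

```python
from dataclasses import dataclass


@dataclass
class Stock:
    """A quantity that accumulates over time (hypothetical example: a population)."""
    name: str
    value: float


def simulate(population: Stock, birth_rate: float, death_rate: float,
             steps: int, dt: float = 1.0) -> list[float]:
    """Advance one stock with simple Euler steps and return its history.

    Feedback loops, labelled so an audience can identify them:
      R1 (reinforcing): population -> births -> population
      B1 (balancing):   population -> deaths -> population
    """
    history = [population.value]
    for _ in range(steps):
        births = birth_rate * population.value  # inflow, driven by the stock
        deaths = death_rate * population.value  # outflow, driven by the stock
        population.value += (births - deaths) * dt
        history.append(population.value)
    return history


# Illustrative values only; they are not empirical estimates.
history = simulate(Stock("Population", 100.0),
                   birth_rate=0.03, death_rate=0.01, steps=10)
```

Each name in the sketch corresponds to something an audience could point to in the real system, which is the property the paragraph above asks for.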

Furthermore, the model structure should include components that its audience thinks are important drivers of the system. Leaving out a factor that the audience considers to be a key driver can fatally discredit a model, irrespective of its performance or other qualities. This is true even if the factor has a negligible effect. Generally speaking, it is much easier to include a factor an audience views as important than it is to convince the audience later that the factor does not really matter.

Data

The more a model uses real-world data, the more confidence an audience will have in the model. Ideally, you have empirical data to justify the value of every primitive in your model. In practice, such a goal may be a pipe dream. Indeed, for a complex model, obtaining data to parameterize every aspect is usually impossible.[2] When faced with model primitives for which no empirical data is available, you must take measures to avoid the appearance that their values were chosen without justification or that you are leading the audience toward a predetermined conclusion. Sensitivity testing, as discussed later, is one way to achieve this. Another is to survey experts in the field to solicit a set of recommended parameter values, which can then be aggregated or used to justify the final parameterization.
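The two measures mentioned above can be sketched together in Python. The example aggregates a set of expert estimates into a low, median, and high value, then reruns a toy model at each value to see how much the result moves. The expert figures are made up for illustration, and `aggregate_estimates`, `final_population`, and the toy growth model are hypothetical names and assumptions, not a method prescribed by the book.

```python
import statistics


def aggregate_estimates(estimates: list[float]) -> dict[str, float]:
    """Summarize surveyed parameter values as a low / median / high range."""
    return {
        "low": min(estimates),
        "median": statistics.median(estimates),
        "high": max(estimates),
    }


def final_population(birth_rate: float, initial: float = 100.0,
                     death_rate: float = 0.01, steps: int = 10) -> float:
    """Toy model: one stock growing at (birth_rate - death_rate) per step."""
    value = initial
    for _ in range(steps):
        value += (birth_rate - death_rate) * value
    return value


# Made-up survey responses for an uncertain birth rate (not real data).
expert_birth_rates = [0.02, 0.03, 0.03, 0.04, 0.05]

summary = aggregate_estimates(expert_birth_rates)

# Simple sensitivity test: rerun the model at each end of the expert range.
sensitivity = {label: final_population(rate) for label, rate in summary.items()}
```

Reporting the spread in `sensitivity` alongside the median result shows the audience how far the conclusion depends on a parameter that could not be measured directly.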

Peer-Review

Going through a peer-review process can be extremely useful in establishing confidence in a model. Two general types of peer-review are available. In the first, the model is incorporated into an academic journal article and submitted for publication. The article is then reviewed by (generally two or three) anonymous academics in the field. These reviewers critique the article and judge whether its contribution to the literature merits publication. In the second type of peer-review, a committee is assembled (hired) to review a specific model and provide conclusions and recommendations to clients.

Peer-review can be very useful in establishing the credibility of a model. A credible model is one the audience (and developer) can be more confident in, other things being equal. Conclusions drawn by an independent group of experts appear more legitimate than those of self-interested modelers.[3] This can be especially useful when trying to meet an abstract standard, such as that the model represents the “best available technology” or the “best available science”.

A key risk of peer-review is, of course, that the reviewers will find a model deficient in important respects. Good criticism can be very useful and can help improve a model. In practice, however, some criticism amounts to nitpicking over details, and some advice would make the model worse if followed.

Next edition: Building Confidence in Models: Model Implementation.

Article sources: Beyond Connecting the Dots, Insight Maker. Reproduced by permission.

Header image source: Beyond Connecting the Dots.

Notes:

1. This is different from predictive models, where the results of the model are much more important than the design and the “proof is in the pudding”, so to speak.

2. Leading to the clichéd conclusion of many modeling studies: “We are unable to draw strong conclusions from this modeling work. Instead, our contribution has been to show where additional data needs to be collected.”

3. When the peer-review panel is hired by the client there is some conflict of interest, but the panel members should not be swayed by this.