Teaching machines to cooperate

Originally posted on The Horizons Tracker.

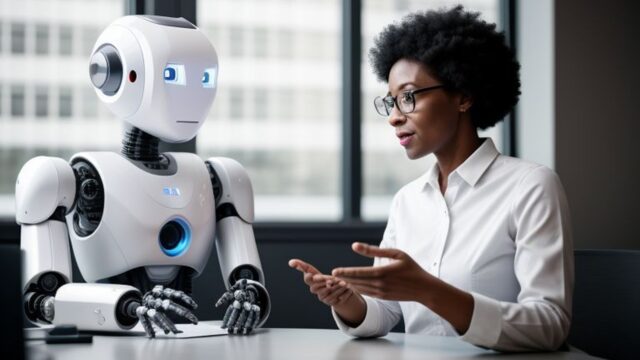

The latest generation of AI has proven incredibly proficient at the kinds of tasks we normally regard as a strength of computers, but doubts remain about its ability to perform more human tasks.

It might be a small step, but a step has indeed been made in this direction, with research¹ from BYU highlighting how AI can be programmed to cooperate and compromise rather than compete.

“The end goal is that we understand the mathematics behind cooperation with people and what attributes artificial intelligence needs to develop social skills,” the authors say. “AI needs to be able to respond to us and articulate what it’s doing. It has to be able to interact with other people.”

Autonomous cooperation

The researchers programmed several machines with their algorithm before tasking them with playing a two-player game that tested their ability to cooperate in various relationships. The games were designed to examine a range of team designs, including machine and machine, human and machine, and human and human. The researchers wanted to test whether the machines could cooperate better than purely human teams.

“Two humans, if they were honest with each other and loyal, would have done as well as two machines,” the team explain. “As it is, about half of the humans lied at some point. So essentially, this particular algorithm is learning that moral characteristics are good. It’s programmed to not lie, and it also learns to maintain cooperation once it emerges.”

The cooperative abilities of the machines were then enhanced by programming them with various ‘cheap talk’ phrases. For instance, if humans cooperated with the machine, it might respond with “sweet, we’re getting rich.” Alternatively, if the human player short-changed the machine, it was capable of cursing back at them.
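To make the setup a little more concrete, here is a minimal Python sketch of a repeated two-player game in which one player attaches cheap talk to its moves. It is not the algorithm from the study: the prisoner's dilemma payoffs, the mirror-the-partner strategy, the names `TalkativeAgent` and `play`, and the wording of the complaint are all assumptions made purely for illustration. Only the "sweet, we're getting rich" phrase comes from the article.

```python
# Illustrative only: a simple mirror-the-partner player with cheap talk.
# This is NOT the algorithm from the study; payoffs, names and the complaint
# phrase are assumptions made for this sketch.

COOPERATE, DEFECT = "C", "D"

# Conventional prisoner's dilemma payoffs: (my_move, their_move) -> my points.
PAYOFFS = {
    (COOPERATE, COOPERATE): 3,
    (COOPERATE, DEFECT): 0,
    (DEFECT, COOPERATE): 5,
    (DEFECT, DEFECT): 1,
}


class TalkativeAgent:
    """Cooperates first, then copies the partner's previous move."""

    def __init__(self):
        self.partner_last = None

    def move(self):
        if self.partner_last is None:
            return COOPERATE
        return self.partner_last

    def observe(self, my_move, partner_move):
        """Remember the partner's move and return a cheap-talk message."""
        self.partner_last = partner_move
        if my_move == COOPERATE and partner_move == COOPERATE:
            return "Sweet, we're getting rich."
        if my_move == COOPERATE and partner_move == DEFECT:
            return "You short-changed me!"  # hypothetical stand-in for a curse
        return ""


def play(agent, partner_moves):
    """Play the agent against a fixed list of partner moves."""
    agent_score = partner_score = 0
    messages = []
    for partner_move in partner_moves:
        my_move = agent.move()
        agent_score += PAYOFFS[(my_move, partner_move)]
        partner_score += PAYOFFS[(partner_move, my_move)]
        messages.append(agent.observe(my_move, partner_move))
    return agent_score, partner_score, messages
```

Calling `play(TalkativeAgent(), ["C", "C", "D", "C"])` runs four rounds against a partner who defects once. The agent praises the first two rounds, complains after being short-changed in the third, and retaliates in the fourth.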

This seemingly trivial addition had a profound impact, doubling the amount of cooperation the machines were involved in, while also making it harder for human players to tell whether they were playing with a human or a machine. The team believe their work could have far-reaching implications.

“In society, relationships break down all the time,” they explain. “People that were friends for years all of a sudden become enemies. Because the machine is often actually better at reaching these compromises than we are, it can potentially teach us how to do this better.”

Is it an example of machines being able to do inherently human tasks? Probably not, but it’s an interesting development nonetheless.

Article source: Teaching Machines To Cooperate.

Header image: Sophia the Robot, Chief Humanoid, Hanson Robotics & SingularityNET, and Ben Goertzel, Chief Scientist, Hanson Robotics & SingularityNET, at a press conference during the opening day of Web Summit 2017 at Altice Arena in Lisbon. Adapted from SM5_7447 by Web Summit, which is licensed under CC BY 2.0.

Reference:

- Crandall, J. W., Oudah, M., Ishowo-Oloko, F., Abdallah, S., Bonnefon, J. F., Cebrian, M., … & Rahwan, I. (2018). Cooperating with machines. Nature Communications, 9(1), 233.