AI learns the essence of an image dataset to create believable invented photos [Top 100 journal articles of 2019]

This article is part 4 of a series reviewing selected papers from Altmetric’s list of the top 100 most-discussed scholarly works of 2019.

Generative adversarial networks (GANs)

Generative adversarial networks (GANs) are an exciting recent innovation in machine learning. GANs are generative models: they create new data instances that resemble your training data. For example, GANs can create images that look like photographs of human faces, even though the faces don’t belong to any real person. [Google Developers]

What does this mean for knowledge management?

Because GANs can generate realistic faces of people who do not exist, they are potentially useful in knowledge management (KM) for creating avatars for personas [1], chatbots, or gamification.

However, such images could also be used in dark side KM tactics [2], just like the deepfake videos discussed in part 2 of this series.

New GAN generator improves the state-of-the-art

The fifth most discussed article [3] in Altmetric’s top 100 list for 2019 shows how rapid advances in machine learning have enabled a new GAN generator that outperforms earlier generators on standard image quality metrics.

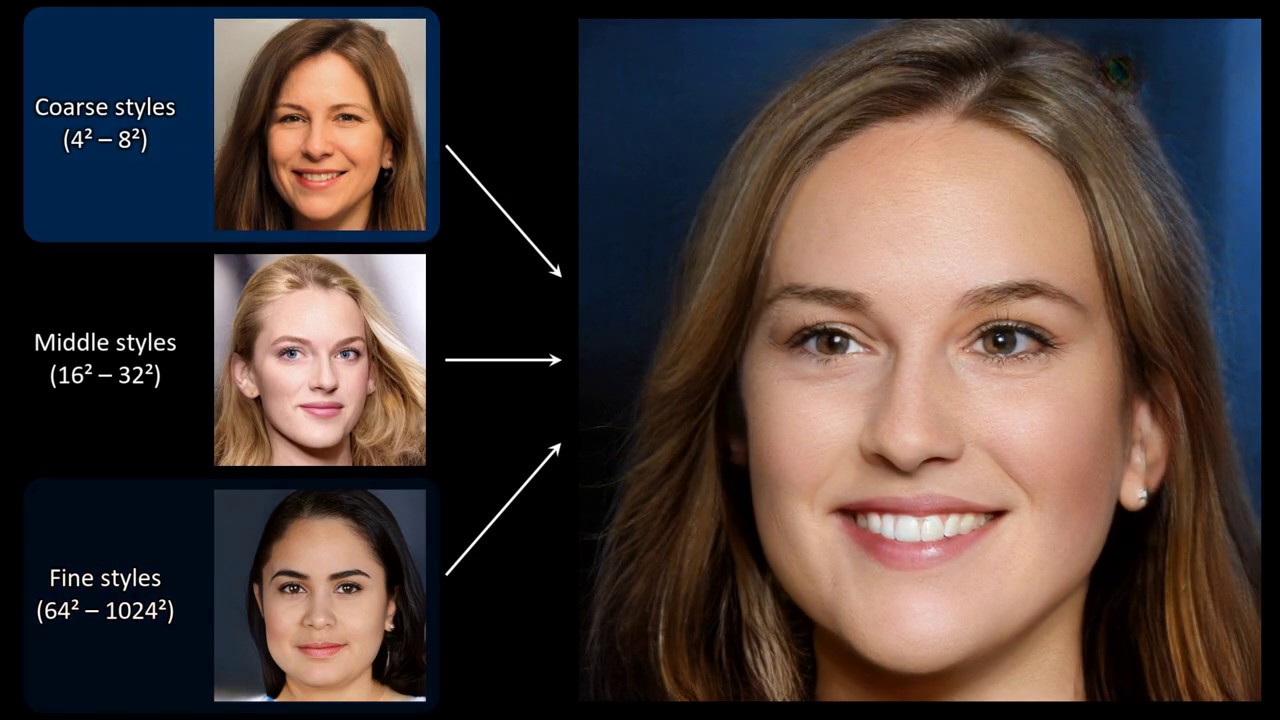

The new generator was developed by a team from the American technology company NVIDIA. As shown in the video above and summarised in the following abstract, the generator learns to separate different aspects of images automatically, without human supervision. After training, these aspects can be recombined in any desired way. Besides creating realistic faces of people who do not exist, the new generator has been successfully applied to datasets of bedrooms, cars, and cats.
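To make the idea of recombining aspects more concrete, here is a minimal Python sketch of how two "styles" might be mixed across the layers of a generator. It is an illustration under stated assumptions, not the NVIDIA implementation: `sample_style` is a hypothetical stand-in for the learned mapping, `mix_styles` and `crossover` are names invented for this example, and the layer count of 18 and the tiny style dimension are assumed values. No image is produced; the sketch only shows which style would be fed to which layer.

```python
import random

NUM_LAYERS = 18  # assumption: number of style inputs in a high-resolution generator
STYLE_DIM = 4    # assumption: kept tiny for readability; real models use far more


def sample_style(rng, dim=STYLE_DIM):
    """Hypothetical stand-in for the learned mapping: returns one style vector."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def mix_styles(style_a, style_b, crossover, num_layers=NUM_LAYERS):
    """Return one style per layer: A before the crossover layer, B from it onward."""
    if not 0 <= crossover <= num_layers:
        raise ValueError("crossover must lie between 0 and num_layers")
    return [style_a if layer < crossover else style_b
            for layer in range(num_layers)]


rng = random.Random(0)
style_a = sample_style(rng)  # would supply the coarse aspects
style_b = sample_style(rng)  # would supply the finer aspects

per_layer = mix_styles(style_a, style_b, crossover=4)
coarse_from_a = sum(1 for s in per_layer if s is style_a)
print(f"{coarse_from_a} coarse layers use style A, "
      f"{NUM_LAYERS - coarse_from_a} finer layers use style B")
```

Moving the `crossover` point earlier or later changes how much of the result would be inherited from each source, which is one way to picture the scale-specific control described in the abstract.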

The NVIDIA research also produced a new high-quality dataset of human faces, which was used to train the generator.

Author abstract

We propose an alternative generator architecture for generative adversarial networks, borrowing from style transfer literature. The new architecture leads to an automatically learned, unsupervised separation of high-level attributes (e.g., pose and identity when trained on human faces) and stochastic variation in the generated images (e.g., freckles, hair), and it enables intuitive, scale-specific control of the synthesis. The new generator improves the state-of-the-art in terms of traditional distribution quality metrics, leads to demonstrably better interpolation properties, and also better disentangles the latent factors of variation. To quantify interpolation quality and disentanglement, we propose two new, automated methods that are applicable to any generator architecture. Finally, we introduce a new, highly varied and high-quality dataset of human faces.
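The "interpolation properties" mentioned in the abstract concern what happens when one moves gradually between two latent vectors and generates an image at each step. The following Python sketch shows only the path construction, using plain linear interpolation as a simplifying assumption. The function names `lerp` and `interpolation_path` and the example vectors are invented for illustration, and the sketch does not reproduce the paper's own metrics; a trained generator would be needed to turn each point into an image.

```python
def lerp(a, b, t):
    """Linearly interpolate between two equal-length vectors for t in [0, 1]."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]


def interpolation_path(a, b, steps):
    """Return `steps` evenly spaced points from a to b, endpoints included."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    return [lerp(a, b, i / (steps - 1)) for i in range(steps)]


# Hypothetical latent vectors; real ones would have many more dimensions.
latent_a = [0.0, 1.0, -2.0]
latent_b = [4.0, 3.0, 2.0]

path = interpolation_path(latent_a, latent_b, steps=5)
for point in path:
    print(point)
```

With a well-behaved generator, images produced along such a path would be expected to change smoothly from one face to the other rather than jumping abruptly.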

References:

1. Maier, R., & Thalmann, S. (2010). Using personas for designing knowledge and learning services: results of an ethnographically informed study. International Journal of Technology Enhanced Learning, 2(1-2), 58-74.

2. Alter, S. (2006, January). Goals and tactics on the dark side of knowledge management. In Proceedings of the 39th Annual Hawaii International Conference on System Sciences (HICSS’06) (Vol. 7, pp. 144a-144a). IEEE.

3. Karras, T., Laine, S., & Aila, T. (2019). A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 4401-4410).
