Radicalisation pathways and YouTube’s recommendation algorithms [Top 100 journal articles of 2019]

This article is part 10 of a series reviewing selected papers from Altmetric’s list of the top 100 most-discussed scholarly works of 2019.

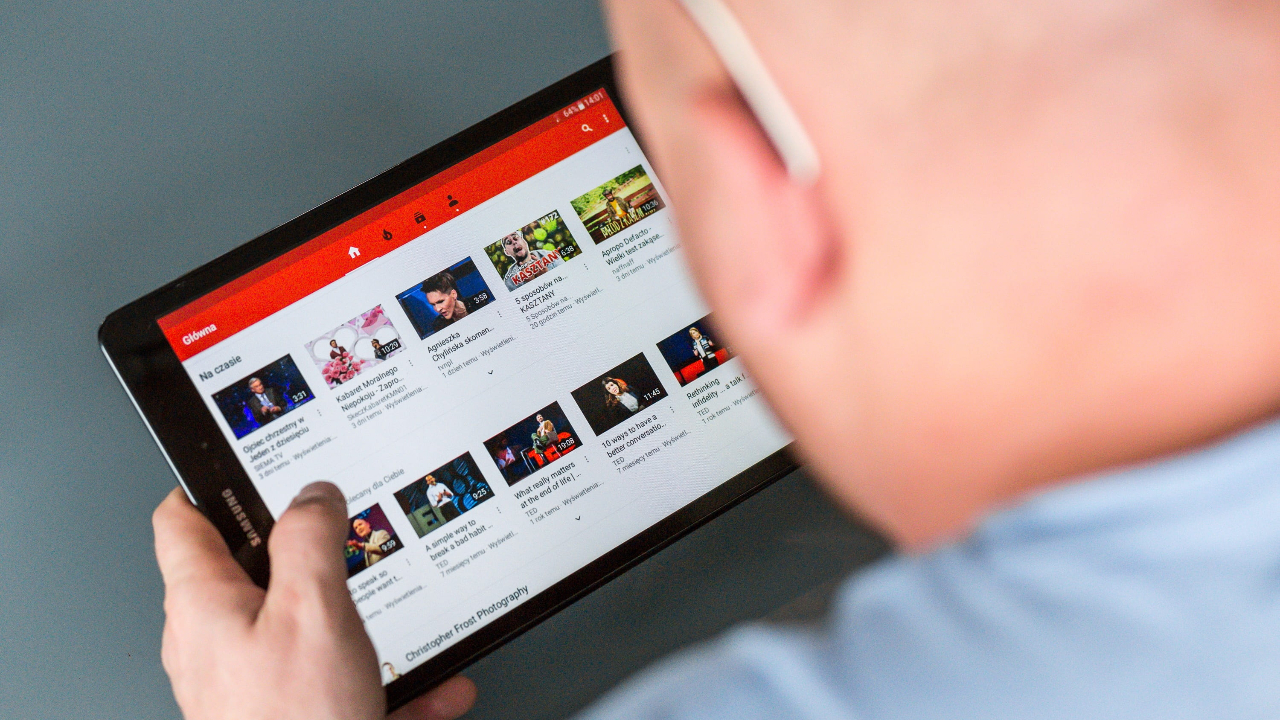

YouTube is currently the second most popular website in the world, behind top-ranked Google (which owns YouTube). YouTube has unique appeal for young people[1], and has become a key source of news for them[2].

However, YouTube has faced criticism for aspects of the content that it allows on its site, including offensive material, the promotion of conspiracy theories, and radical far-right views. One concern that has been widely expressed is that YouTube takes people down radicalisation pathways, where users looking at benign content are pushed in the direction of radical and inflammatory right-wing content by YouTube’s recommendation algorithms.

The article ranked #89 in Altmetric’s top 100 list for 2019[3] set out to investigate this concern. The researchers analysed a dataset covering the past decade: more than 79 million comments on 331,849 videos from 360 YouTube channels, along with more than 2 million video recommendations and 10,000 channel recommendations.

Three YouTube communities were studied:

- Alt-right, which is a “loose segment of the white supremacist movement consisting of individuals who reject mainstream conservatism in favor of politics that embrace racist, anti-Semitic and white supremacist ideology.”

- Alt-lite, which was “created to differentiate right-wing activists who deny … embracing white supremacist ideology.”

- The Intellectual Dark Web (I.D.W.), which “is a term coined by Eric Ross Weinstein to refer to a particular group of academics and podcast hosts” who put forward often controversial views in regard to a range of popular topics.

The results of the research indicate that there are indeed radicalisation pathways on YouTube. As shown in the abstract below, the study found that:

- Since 2015, the three communities studied have seen massive increases in the number of views, likes, videos published, and comments.

- A significant number of commenting users systematically migrate from commenting exclusively on milder content to commenting on more extreme content. The study authors argue that this finding constitutes significant evidence that there has been, and continues to be, user radicalization on YouTube, and that their analysis is consistent with the theory that more extreme content has “piggybacked” on the surge in popularity of I.D.W. and Alt-lite content.

- YouTube’s recommendation algorithms appear to frequently suggest Alt-lite and I.D.W. content. From these two communities, Alt-right content can be reached through recommended channels, but not through recommended videos.

What does this mean for knowledge management?

Respected knowledge management (KM) community member Euan Semple has long raised concerns about the extent to which algorithms manipulate public sentiment. He writes:

The patterns that we leave online are interpreted – and, controversially, manipulated – by algorithms. Mathematical equations that take data, assign “meaning” to that data, and then take action on the basis of that process … [I use] the phrase “the ideology of algorithms” to express the significance of this increasingly powerful capability.

The YouTube radicalisation pathways study provides evidence in support of the existence of this ideology, and the negative impacts that can result from it.

In response to the issues he raises, Semple calls for open public discussion about the future role of algorithms. He also argues that:

we all need to take responsibility for moving the arguments on, making sure that we expect the highest standards from our legislators, politicians, and technologists. We can no longer afford to be passive consumers.

People need to take more responsibility for their information environment. They need to be more aware of the risks of bias. They need to be more critical in their thinking. They need to think harder about the sources of the information that they are reading. They need to be more responsible about what they are sharing and why. Collectively we need to grasp the situation and not run away from it.

As we’ve previously proposed in RealKM Magazine, the KM community can and should play a leading role in this regard. A recent paper on knowledge management and leadership[4] supports this, stating that “the validation of knowledge is getting more important. Managing knowledge on a process level is not enough.”

Author abstract

Non-profits and the media claim there is a radicalization pipeline on YouTube. Its content creators would sponsor fringe ideas, and its recommender system would steer users towards edgier content. Yet, the supporting evidence for this claim is mostly anecdotal, and there are no proper measurements of the influence of YouTube’s recommender system. In this work, we conduct a large scale audit of user radicalization on YouTube. We analyze 331,849 videos of 360 channels which we broadly classify into: control, the Alt-lite, the Intellectual Dark Web (I.D.W.), and the Alt-right – channels in the I.D.W. and the Alt-lite would be gateways to fringe far-right ideology, here represented by Alt-right channels. Processing more than 79M comments, we show that the three communities increasingly share the same user base; that users consistently migrate from milder to more extreme content; and that a large percentage of users who consume Alt-right content now consumed Alt-lite and I.D.W. content in the past. We also probe YouTube’s recommendation algorithm, looking at more than 2 million recommendations for videos and channels between May and July 2019. We find that Alt-lite content is easily reachable from I.D.W. channels via recommendations and that Alt-right channels may be reached from both I.D.W. and Alt-lite channels. Overall, we paint a comprehensive picture of user radicalization on YouTube and provide methods to transparently audit the platform and its recommender system.

Header image source: Pikrepo, Public Domain.

References:

1. Pereira, S., Moura, P. F. R. D., & Fillol, J. (2018). The YouTubers phenomenon: What makes YouTube stars so popular for young people? Fonseca, Journal of Communication, 17, 107–123.

2. Media Insight Project. (2015). How Millennials Get News: Inside the Habits of America’s First Digital Generation. American Press Institute and Associated Press-NORC Center for Public Affairs Research.

3. Ribeiro, M. H., Ottoni, R., West, R., Almeida, V. A., & Meira, W. (2019). Auditing radicalization pathways on YouTube. arXiv preprint arXiv:1908.08313.

4. Winkler, K., & Wagner, B. (2018). The relevance of knowledge management in the context of leadership. Journal of Applied Leadership and Management, 5(1).

Also published on Medium.