How can we know unknown unknowns?

By Michael Smithson. Originally published on the Integration and Implementation Insights blog.

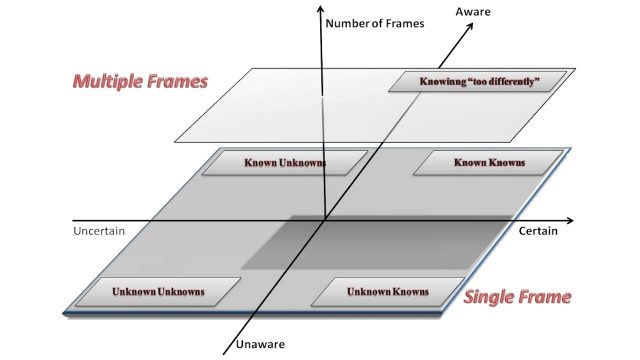

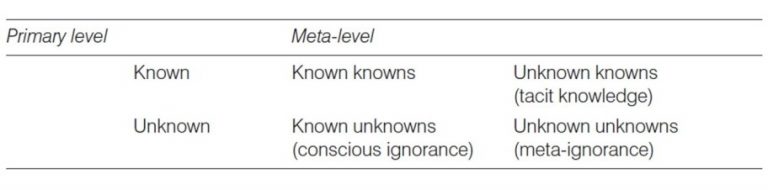

In a 1993 paper [1], philosopher Ann Kerwin elaborated a view on ignorance that has been summarized in a 2×2 table describing crucial components of metacognition (see figure below). One margin of the table consisted of “knowns” and “unknowns”. The other margin comprised the adjectives “known” and “unknown”. Crosstabulating these produced “known knowns”, “known unknowns”, “unknown knowns”, and “unknown unknowns”. The latter two categories have caused some befuddlement. What does it mean to not know what is known, or to not know what is unknown? And how can we convert either of these into their known counterparts?

Attributing ignorance

To begin, no form of ignorance can be properly considered without explicitly tracking who is attributing it to whom. With unknown unknowns, we have to keep track of three viewpoints: the unknower, the possessor of the unknowns, and the claimant (the person making the statement about unknown unknowns). Each of these can be oneself or someone else.

Various combinations of these identities generate quite different states of (non)knowledge and claims whose validities also differ. For instance, compare:

- A claims that B doesn’t know that A doesn’t know X

- B claims that A doesn’t know that A doesn’t know X

- A claims that A doesn’t know that B doesn’t know X

- A claims that A doesn’t know that A doesn’t know X

The first two could be plausible claims, because the claimant is not the person who doesn’t know that someone doesn’t know X. The last two claims, however, are problematic because they require self-insight that seems unavailable. How can I claim I don’t know that I don’t know X? The nub of the problem is self-attributing false belief. I am claiming one of two things. First, I may be saying that I believe I know X, but my belief is false. This claim doesn’t make sense if we take “belief” in its usual meaning; I cannot claim to believe something that I also believe is false. The second possible claim is that my beliefs omit the possibility of knowing X, but this omission is mistaken. If I’m not even aware of X in the first place, then I can’t claim that my lack of awareness of X is mistaken.
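The comparison above can be laid out as a small sketch. It is only an illustration of the argument, not a formal model: the `Claim` class and its field names are assumptions introduced here, and the test it applies (a claim is problematic when the claimant is also the person said to be unaware) is simply the rule of thumb stated in the preceding paragraph.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    """One statement of the form
    '<claimant> claims that <unknower> doesn't know that <possessor> doesn't know X'.
    The class and field names are hypothetical, chosen to mirror the three viewpoints."""
    claimant: str   # the person making the statement
    unknower: str   # the person said to be unaware of the unknown
    possessor: str  # the person whose lack of knowledge of X is at issue

    def is_self_attributed(self) -> bool:
        # Rule of thumb from the text: the claim needs unavailable
        # self-insight when the claimant is also the unknower.
        return self.claimant == self.unknower

    def describe(self) -> str:
        return (f"{self.claimant} claims that {self.unknower} doesn't know "
                f"that {self.possessor} doesn't know X")


# The four example claims, in the order listed above.
claims = [
    Claim(claimant="A", unknower="B", possessor="A"),
    Claim(claimant="B", unknower="A", possessor="A"),
    Claim(claimant="A", unknower="A", possessor="B"),
    Claim(claimant="A", unknower="A", possessor="A"),
]

for claim in claims:
    verdict = "problematic" if claim.is_self_attributed() else "plausible"
    print(f"{claim.describe()}: {verdict}")
```

Note that the possessor plays no part in the test: what matters is only whether the claimant and the unknower are the same person.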

Current unknown unknowns would seem to be claimable by us only about someone else, and therefore current unknown unknowns can be attributed to us only by someone else. Straightaway this suggests one obvious means of identifying our own unknown unknowns: cultivate the company of people whose knowledge-bases differ sufficiently from ours that they are capable of pointing out things we don’t know that we don’t know. However, most of us don’t do this. Instead, the literature on interpersonal attraction and friendships shows that we gravitate towards others who are just like us, sharing the same beliefs, values, prejudices, and therefore blind spots.

Different kinds of unknown unknowns

There are different kinds of unknown unknowns, each requiring different “remedies”. The distinction between matters that we mistakenly think that we know about, versus matters that we’re unaware of altogether, is probably the most important distinction among types of unknown unknowns. Its importance stems from the fact that these two kinds have different psychological impacts when they are attributed to us and require different readjustments to our view of the world.

1. False convictions

A shorthand term for the first kind of unknown unknown is a “false conviction”. This can be a matter of fact that is overturned by a credible source. For instance, I may believe that the tomato is a vegetable but then learn from my more botanically literate friend that its cladistic status is that of a fruit. Or it can be an assumption about one’s depth of knowledge that is debunked by raising the standard of proof: I may be convinced that I understand compound interest, but then someone asks me to explain it to them and I realize that I can’t provide a clear explanation.

What makes us vulnerable to false convictions? A major contributor is over-confidence about our stock of knowledge. Considerable evidence has been found for the claim that most people believe that they understand the world in much greater breadth, depth, and coherence than they actually do. In a 2002 paper [4], psychologists Leonid Rozenblit and Frank Keil coined a phrase to describe this: the “illusion of explanatory depth”. They found that this kind of overconfidence is greatest in explanatory knowledge about how things work, whether in natural processes or artificial devices. They also were able to rule out self-serving motives as a primary cause of the illusion of explanatory depth. Instead, the illusion of explanatory depth arises mainly because our scanty knowledge-base gets us by most of the time, we are not called upon often to explain our beliefs in depth, and even if we intend to check them out, the opportunities for first-hand testing of many beliefs are very limited. Moreover, scanty knowledge also limits the accuracy of our assessments of our own ignorance: greater expertise brings with it greater awareness of what we don’t know.

Another important contributor is hindsight bias, the feeling after learning about something that we knew it all along. In the 1970s, cognitive psychologists such as Baruch Fischhoff ran experiments asking participants to estimate the likelihoods of outcomes of upcoming political events. After these events had occurred or failed to occur, the participants were asked to recall the likelihoods that they had assigned. They tended to over-estimate the likelihood they had originally assigned to an event if that event had actually happened.

Nevertheless, identifying false convictions and ridding ourselves of them is not difficult in principle, provided that we’re receptive to being shown to be wrong and are able to resist hindsight bias. We can self-test our convictions by checking their veracity via multiple sources, by subjecting them to more stringent standards of proof, and by assessing our ability to explain the concepts underpinning them to others. We can also prevent false convictions by being less willing to leap to conclusions and more willing to suspend judgment.

2. Unknowns we aren’t aware of at all

Finally, let’s turn to the second kind of unknown unknown, the unknowns we aren’t aware of at all. This type of unknown unknown gets us into rather murky territory. A good example of it is denial, which we may contrast with the type of unknown unknown that is merely due to unawareness. This distinction is slightly tricky, but a good indicator is whether we’re receptive to the unknown when it is brought to our attention. A climate-change activist whose friend is adamant that the climate isn’t changing will likely think of her friend as a “climate-change denier” in two senses: he is denying that the climate is changing and is also in denial about his ignorance on that issue.

Can unknown unknowns be beneficial or even adaptive?

One general benefit simply arises from the fact that we don’t have the capacity to know everything. The circumstances and mechanisms that produce unknown unknowns act as filters, with both good and bad consequences. Among the good consequences is the avoidance of paralysis: if we were to suspend belief about every claim we couldn’t test first-hand, we would be unable to act in many situations. Another benefit is spreading the risks and costs involved in getting first-hand knowledge by entrusting large portions of those efforts to others.

Perhaps the grandest claim for the adaptability of denial was made by Ajit Varki and Danny Brower in their book on the topic [5]. They argued that the human capacity for denial was selected (evolutionarily) because it enhanced the reproductive capacity of humans who had evolved to the point of realising their own mortality. Without the capacity to be in denial about mortality, their argument goes, humans would have been too fearful and risk-averse to survive as a species. Whether convincing or not, it’s a novel take on how humans became human.

Antidotes

Having taken us on a brief tour through unknown unknowns, I’ll conclude by summarizing the “antidotes” available to us.

- Humility. A little over-confidence can be a good thing, but if we want to be receptive to learning more about what we don’t know that we don’t know, a humble assessment of the little that we know will pave the way.

- Inclusiveness. Consulting others whose backgrounds are diverse and different from our own will reveal many matters and viewpoints we would otherwise be unaware of.

- Rigor. Subjecting our beliefs to stricter standards of evidence and logic than everyday life requires of us can quickly reveal hidden gaps and distortions.

- Explication. One of the greatest tests of our knowledge is to be able to teach or explain it to a novice.

- Acceptance. None of us can know more than a tiny fraction of all there is to know, and none of us can attain complete awareness of our own ignorance. We are destined to sail into an unknowable future, and accepting that makes us receptive to surprises and novelty, and therefore to converting unknown unknowns into known unknowns. That unknowable future is not just a source of anxiety and fear, but also the font of curiosity, hope, aspiration, adventure, and freedom.

What are your thoughts?

You’re invited to add your comments to the discussion on the Integration and Implementation Insights blog.

Biography

Michael Smithson PhD is a Professor in the Research School of Psychology at The Australian National University. His primary research interests are in judgment and decision making under ignorance and uncertainty, statistical methods for the social sciences, and applications of fuzzy set theory to the social sciences.

Article source: How can we know unknown unknowns?, republished by permission.

This blog post is part of a series on unknown unknowns as part of a collaboration between the Australian National University and Defence Science and Technology.

Blog posts in the series:

- Accountability and adapting to surprises by Patricia Hirl Longstaff

- What do you know? And how is it relevant to unknown unknowns? by Matthew Welsh (which will shortly be republished in RealKM Magazine).

- Managing innovation dilemmas: Info-gap theory by Yakov Ben-Haim

References:

1. Kerwin, A. (1993). None too solid: Medical ignorance. Knowledge, 15, 2: 166-185.

2. Kerwin, A. (1993). None too solid: Medical ignorance. Knowledge, 15, 2: 166-185.

3. Bammer, G., Smithson, M. and the Goolabri Group. (2008). The nature of uncertainty. In, G. Bammer and M. Smithson (eds.), Uncertainty and Risk: Multi-Disciplinary Perspectives, Earthscan: London, United Kingdom: 289-303.

4. Rozenblit, L. and Keil, F. (2002). The misunderstood limits of folk science: An illusion of explanatory depth. Cognitive Science, 26, 5: 521-562.

5. Varki, A. and Brower, D. (2013). Denial: Self-deception, false beliefs, and the origins of the human mind. Hachette: London, United Kingdom.