Getting to the heart of the problems with Boeing, Takata, and Toyota (part 1): The situational awareness knowledge challenge

This four-part series looks at what recent fatal Boeing 737 MAX aircraft crashes have in common with the Toyota and Takata automotive recall scandals, and proposes a solution.

In July 2013, 16-year-old Pablo Garcia was admitted to the Benioff Children’s Hospital of the highly respected University of California San Francisco (UCSF) Medical Center for what should have been a routine colonoscopy examination. He would end up fighting for his life in a clinical emergency, the result of hospital staff giving him 38½ times the required dose of the common antibiotic Septra.

How could such a life-threatening mistake have occurred? The four-part Wired series The Overdose chronicles how a critical error was made in using the hospital’s computer system. When ordering Pablo Garcia’s medication, Jenny Lucca, a pediatrics resident at UCSF, thought that she was ordering one 160 mg double-strength Septra tablet, but had actually requested a dosage of 160 mg per kg of body weight, which was not one double-strength Septra, but 38½ of them!
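The arithmetic behind the error can be sketched in a few lines. This is only an illustration, not the hospital system's logic: the function name and the `per_kg` flag are hypothetical, and the body weight of 38.5 kg is an assumption inferred from the figures quoted above (38½ tablets of 160 mg at 160 mg per kg), not a weight stated in this article.

```python
TABLET_MG = 160  # one double-strength tablet, per the figures quoted above


def tablets_ordered(dose, per_kg, weight_kg):
    """Return how many tablets an order of `dose` works out to.

    If per_kg is True, the dose is read as mg per kg of body weight;
    otherwise it is read as a flat number of mg.
    """
    total_mg = dose * weight_kg if per_kg else dose
    return total_mg / TABLET_MG


ASSUMED_WEIGHT_KG = 38.5  # assumption: inferred from the article's numbers

# What the resident intended: a flat 160 mg order.
intended = tablets_ordered(160, per_kg=False, weight_kg=ASSUMED_WEIGHT_KG)

# What was actually entered: 160 mg per kg of body weight.
actual = tablets_ordered(160, per_kg=True, weight_kg=ASSUMED_WEIGHT_KG)

print(intended, actual)
```

The same number, 160, yields either one tablet or 38½ depending solely on which unit mode is active, which is why an easily overlooked interface setting could have such a large effect.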

The Wired series goes on to document how the overdose was caused by complexity at the interface of technology and people, stating that:

The clinicians involved in Pablo’s case that day … made small errors or had mistaken judgments that contributed to their patient’s extraordinary overdose. Yet it was the computer systems, and the awkward and sometimes unsafe ways that they interact with busy and fallible human beings, that ultimately were to blame.

When Jenny Lucca incorrectly ordered Pablo Garcia’s medication, she had ignored computer alerts because these alerts were endless and often meaningless. Because of this, author Bob Wachter suggests in the final article of the Wired series that a significant contributor to the complexity was the hospital’s incessant electronic alerts, and asks if medicine might be able to learn lessons from the aviation industry in this regard.

In response to advice from Captain Chesley “Sully” Sullenberger, the famed “Miracle on the Hudson” pilot, Wachter visited Boeing’s headquarters to see how cockpit alerts are managed, reporting that:

I spent a day in Seattle with several of the Boeing engineers and human factors experts responsible for cockpit design in the company’s commercial fleet. “We created this group to look across all the different gauges and indicators and displays and put it together into a common, consistent set of rules,” Bob Myers, chief of the team, told me. “We are responsible for making sure the integration works out.”

But does Boeing really make sure of this?

In response to the idea that medicine might be able to learn lessons from the aviation industry in regard to automated warnings, let’s fast forward to recent comments by Boeing CEO Dennis Muilenburg.

Muilenburg has admitted that Boeing made a mistake in handling problems with a cockpit warning system in its new 737 MAX aircraft before two shocking crashes that have killed 346 people – Lion Air Flight 610 and Ethiopian Airlines Flight 302.

In the wake of the crashes, the United States (U.S.) Federal Aviation Administration (FAA) has issued an Emergency Order of Prohibition that prohibits the operation of Boeing 737 MAX aircraft by U.S.-certificated operators or in U.S. territory. An associated international airworthiness notification has resulted in the grounding of the 737 MAX worldwide.

The Emergency Order of Prohibition remains in effect while the FAA carries out what it describes as a rigorous safety review of the software enhancement package that Boeing has submitted in response to the crashes. This past week, the FAA has released a statement announcing that as part of the software review it had found a further potential risk that Boeing must mitigate, and this is expected to extend the period of grounding. In a related media release, Boeing has stated that addressing this risk “will reduce pilot workload by accounting for a potential source of uncommanded stabilizer motion.”

The 737 MAX grounding and Boeing’s admissions of failure have sent confidence in the aircraft manufacturer plummeting, with airlines worldwide cancelling orders and numerous investigations and lawsuits launched.

Aviation certainly does have a much better safety record than healthcare, and research also shows that there are safety lessons that medicine can learn from aviation, even though aviation and healthcare have a number of quite distinctive features. For example, a 2015 comparative review [1] has “recommended that healthcare should emulate aviation in its resourcing of staff who specialise in human factors and related psychological aspects of patient safety and staff well-being.”

However, as the Boeing 737 MAX situation shows, the aviation industry isn’t a utopian safety wonderland. Despite all that’s been done to improve safety in aviation, one of the world’s leading aircraft manufacturers has admitted to failures that have contributed to the deaths of 346 people.

So what’s still missing in medicine and aviation?

A 2006 study [2] that also looked at what medicine can learn from aviation suggests that the situational awareness model has potential application in medicine. The study authors advise that:

Situational awareness (SA) is defined as a person’s perception of the elements in the environment within a volume of space and time, the comprehension of their meaning, and the projection of their status in the near future. In essence, SA is a shared understanding of “what’s going on” and “what is likely to happen next”. It is a critical concept in any field that involves complex and dynamic systems where safety is a priority, such as aviation. Indeed, problems with SA have been found to be a leading cause of aviation mishaps. In a study of accidents among major airlines, 88% of those involving human errors could be attributed to problems with SA.

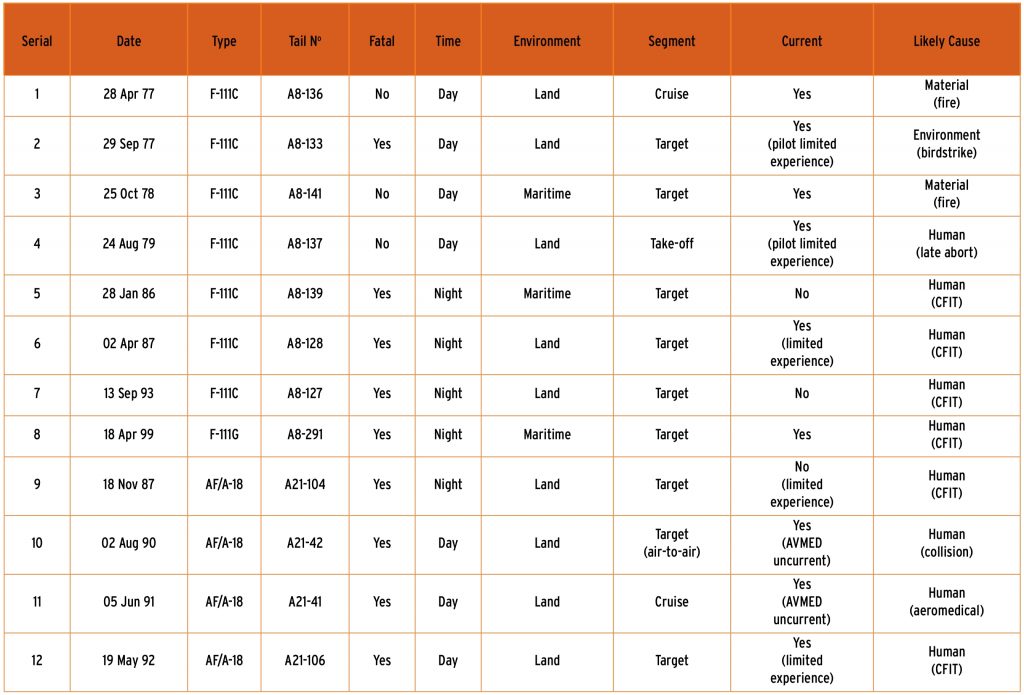

The importance of situational awareness can be clearly seen in the findings of a report [3] investigating Royal Australian Air Force (RAAF) F-111 and AF/A-18 military aircraft accidents between 1972 and 1992, as listed in Figure 1. I personally worked on RAAF F-111 aircraft as an avionics technician between 1985 and 1991, including three of those in the list. The loss of one of those aircraft, A8-139, happened the day after I’d worked on it, killing both aircrew. Two families were left devastated, and like everyone in the squadron, I had to try to come to terms with the death of two greatly valued colleagues.

The report conclusion states that:

Nearly all of the RAAF F-111 and AF/A-18 fatal accidents that have occurred to date can be attributed to some extent to crews not being fully aware of the situation or the environment around them.

Four of the five F-111 and two of the four AF/A-18 fatal accidents could be classified as CFIT [controlled flight into terrain] — these CFIT accidents (apart from one of the AF/A-18 accidents) were at night. Additionally, all of these accidents have been in the target area (or area of engagement). This is where crew workload is at its highest level, and in the case of multi-crewed aircraft, where crew communication and co-ordination tends to break down.

Any distraction, or the planned events not going as expected, can have dire consequences, particularly when operating close to the ground where there is little margin for error.

The preliminary report [4] into the crash of Ethiopian Airlines Flight 302 reveals that planned events soon after takeoff certainly didn’t go as expected, with very dire consequences.

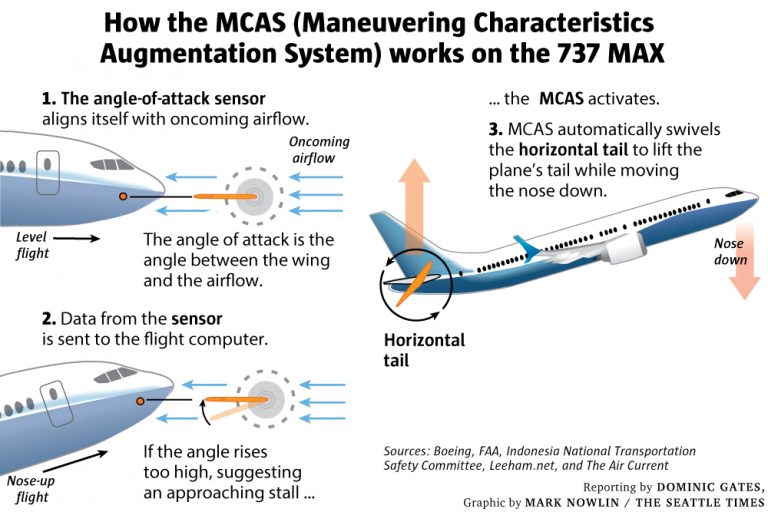

Did the pilots have adequate situational awareness? The preliminary report reveals that shortly after takeoff, the aircraft’s left angle of attack sensor started to send erroneous readings, and the pilots experienced flight control problems. In attempting to deal with the flight control problems, the pilots were found to have followed the procedures in Boeing’s 737 flight manual. Most significant among the problems was “an automatic aircraft nose down (AND) trim command four times without pilot’s input.” The pilots struggled to overcome these commands but were unable to do so, and the aircraft crashed.

The preliminary report shows that the pilots appear to have been as situationally aware as they could possibly have been. This is because Boeing hadn’t told them about the new system that was causing the automatic aircraft nose down trim command in response to the erroneous angle of attack signals – a system called the Maneuvering Characteristics Augmentation System (MCAS). As shown in Figure 2, the MCAS was deployed to address the risk of the aircraft stalling because of the tendency for 737 MAX aircraft to pitch upwards as a result of the engines being located in a different position to previous 737 models. Amid the chaotic scenes in the cockpit, the pilots didn’t realise that taking actions that would have disabled the MCAS would have solved the automatic aircraft nose down problem, because they didn’t know that the MCAS existed.

The situational awareness knowledge challenge

Disturbingly, the MCAS had also been implicated in the earlier crash of Lion Air Flight 610, with Boeing CEO Dennis Muilenburg stating after the release of the Ethiopian Airlines Flight 302 preliminary report that:

The full details of what happened in the two accidents will be issued by the government authorities in the final reports, but, with the release of the preliminary report of the Ethiopian Airlines Flight 302 accident investigation, it’s apparent that in both flights the Maneuvering Characteristics Augmentation System, known as MCAS, activated in response to erroneous angle of attack information.

After the crash of Lion Air Flight 610 and before the crash of Ethiopian Airlines Flight 302, angry American Airlines pilots had confronted Boeing about the lack of information on the MCAS, as revealed in a recording [5] of the exchange.

I argue that, rather than showing that Boeing deliberately sought to hide vital information from pilots, as some have claimed, the exchange in the recording alerts us to the very significant challenge of providing the right amount of information. The chapter [6] “Situation Awareness In Aviation Systems” in the Handbook of Aviation Human Factors provides further insights into this situational awareness knowledge challenge. Both a lack of information and too much information are a problem.

In regard to system design, the handbook chapter states:

The capabilities of the aircraft for acquiring needed information and the way in which it presents that information will have a large impact on aircrew SA [situational awareness]. While a lack of information can certainly be seen as a problem for SA, too much information poses an equal problem. Associated with improvements in the avionics capabilities of aircraft in the past few decades has been a dramatic increase in the sheer quantity of information available. Sorting through this data to derive the desired information and achieve a good picture of the overall situation is no small challenge. Overcoming this problem through better system designs that present integrated data is currently a major design goal aimed at alleviating this problem.

Further, in regard to system complexity, it states:

A major factor creating a challenge for SA is the complexity of the many systems that must be operated. There has been an explosion of avionics systems, flight management systems and other technologies on the flight deck that have greatly increased the complexity of the systems aircrew must operate. System complexity can negatively affect both pilot workload and SA through an increase in the number of system components to be managed, a high degree of interaction between these components and an increase in the dynamics or rate of change of the components. In addition, the complexity of the pilot’s tasks may increase through an increase in the number of goals, tasks and decisions to be made in regard to the aircraft systems. The more complex the systems are to operate, the greater the increase in the mental workload required to achieve a given level of SA. When that demand exceeds human capabilities, SA will suffer.

In the case of the ill-fated 737 MAX, Boeing failed to achieve the right balance between providing too much and too little information to aircrew. Boeing had added the MCAS as a software solution to the hardware problem of the 737 MAX engines being located in a different position to previous 737 models. This increased the complexity of the 737 MAX systems. However, pilots hadn’t been told about the MCAS because it was believed that it would rarely be triggered, with Boeing not wanting to unnecessarily add to the complexity of pilot tasks. But because pilots didn’t know about the MCAS they didn’t know how to respond if it failed to operate correctly, which was the case in both 737 MAX crashes. Further, Boeing failed to respond to the criticism of pilots who pointed out that it hadn’t got the balance right.

These fatal failures mean that aviation really isn’t the role model that medicine thought it was. This is best summed up in the recent comments of Captain Chesley “Sully” Sullenberger, the famed “Miracle on the Hudson” pilot. As we’ve seen above, Sullenberger had previously recommended that Wired author Bob Wachter visit Boeing’s headquarters as part of Wachter’s quest to see if medicine might be able to learn lessons from the aviation industry. However, an NPR news report from last month states that:

One of the nation’s best known airline pilots is speaking out on the problems with Boeing’s 737 Max jetliner. Retired Capt. Chesley “Sully” Sullenberger told a congressional subcommittee Wednesday that an automated flight control system on the 737 Max “was fatally flawed and should never have been approved.”

Sullenberger, who safely landed a damaged US Airways jet on the Hudson River in New York in 2009 after a bird strike disabled the engines, says he understands how the pilots of two 737 Max planes that recently crashed would have been confused as they struggled to maintain control of the aircraft, as an automated system erroneously began forcing the planes into nosedives.

“I can tell you firsthand that the startle factor is real and it’s huge. It absolutely interferes with one’s ability to quickly analyze the crisis and take corrective action,” he said.

Next part (part 2): Current approaches to KM aren’t adequately addressing complexity.

In the case of the ill-fated 737 MAX, Boeing didn’t achieve the right balance in the situational awareness knowledge challenge. Further, it failed to respond to the criticism of pilots who pointed out that it hadn’t got the balance right. But how could this be, when Boeing has a much-lauded knowledge management (KM) program?

Header image: Lion Air Boeing 737-MAX 8 aircraft PK-LQP, which crashed after takeoff on 29 October 2018 resulting in the loss of all 189 lives on board. Adapted from Flickr image by PK-REN, CC BY-SA 2.0.

References:

1. Kapur, N., Parand, A., Soukup, T., Reader, T., & Sevdalis, N. (2015). Aviation and healthcare: a comparative review with implications for patient safety. JRSM Open, 7(1), 2054270415616548.

2. Singh, H., Petersen, L. A., & Thomas, E. J. (2006). Understanding diagnostic errors in medicine: a lesson from aviation. BMJ Quality & Safety, 15(3), 159-164.

3. DDAAFS (2007). Sifting through the evidence: RAAF F-111 and AF/A-18 aircraft and crew losses. Royal Australian Air Force Directorate of Defence Aviation and Air Force Safety (DDAAFS), Canberra.

4. AIB (2019). Aircraft Accident Investigation Preliminary Report, Ethiopian Airlines Group B737-8 (MAX) Registered ET-AVJ. Federal Democratic Republic of Ethiopia Ministry of Transport Aircraft Accident Investigation Bureau (AIB) Report No. AI-01/19, March 2019.

5. Source: CBS Vision via ABC Australia.

6. Endsley, M. R. (1999). Situation awareness in aviation systems. In Garland, D. J., Wise, J. A., & Hopkin, V. D. (Eds.), Handbook of aviation human factors. Mahwah, NJ: Lawrence Erlbaum Associates.